Sensation and Perception - The bottom-up approach

Introduction

Bottom-up? Top-down? Percepts and Neurons?

Chances are, if you have stumbled across this article, it is because you are either studying psychology or have a passing interest in cognition and are wondering what these things mean. Fear not, because this hub aims to provide the perfect starting point by introducing you to some of the key terms and concepts of perception within the realm of cognitive and neurological psychology. I have attempted to make this article as accessible yet academically thought-provoking as possible, so as to give you both an insight into and a critical analysis of some of the key bottom-up theories of perception. I welcome any ratings or feedback you wish to give.

Whatever your reasons for being here, I hope you enjoy your visit and I hope you find this page useful in your pursuits.

Now, without further ado, let us put our bums in the air and begin a stimulating journey of the senses!

...No? No-one with me? Looks like it's just me and the Swan then...

Bottoms up!

Sensation and the 5 sensory modalities

Sensation refers to the act of experiencing the world via one of the five sensory modalities. These are vision (seeing), audition (hearing), olfaction (smelling), gustation (tasting) and touch (feeling). Sensation is directly relevant to perception because it is the means through which we gather basic information from the world around us, which we then perceive through a complicated process of sensory feedback and brain activity, resulting in a fully formed 'percept' of the experience. As an example, let us consider what happens during visual sensation.

As you look upon an object, light (a form of electromagnetic energy) reflects off it and enters the dark hole in the center of your eye called the pupil. The light changes speed and direction as it passes through the eye - a process known as 'refraction' - and lands upon the retina at the back of the eye. The retina is essentially a layer of neurons (nerve cells) and other cells which carry this information from the eye to the brain. The electromagnetic energy is then transduced, or in other words converted, into electrochemical impulses by various photoreceptors within the retina. These impulses carry the information of the object to the brain via the neurons in the retina (Sternberg, 2009) - almost like a chain reaction (or set of dominoes falling in a line, if you will). The impulses eventually reach the primary visual cortex within the occipital lobe (Toates, 2007), located at the rearmost part of the brain, which results in the visual perception of the object (the point at which you are able to 'see' it).

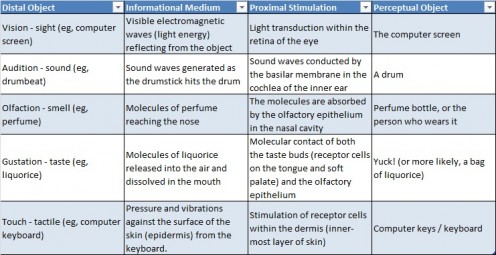

Table 1 - The processes of sensation and their links to perception

Sternberg (2009) categorizes each step in the process to make it easier to understand, based on the early perceptual theories of James Gibson (1966, 1979). In the above example, the object you are looking at would be the 'distal object' which exists within our environment and is the center of our sensation. The light energy reflecting from it is the 'informational medium' as it brings information of the object to the eye. The process that occurs within the eye upon receiving this information is called 'proximal stimulation' and the final perception of the object (the point at which we 'see' and recognize the object) is called the 'perceptual object' (also commonly referred to as the percept).

Bottom-up theories of perception - Templates, Prototypes and Feature-detection

What Do Bottom-up and Top-down Mean?

Those concerned with the bottom-up approach to perception believe that all perception is simply the result of sensation and that no prior learning is necessary for us to perceive. In contrast, the top-down approach assumes that learning plays a vital part in our perception and that without prior experience of a stimulus it is impossible for us to really understand what it is. In reality we are more likely to incorporate elements of both of these approaches when we perceive; however, this article primarily focuses on the bottom-up approach, starting with the 'Template' theory.

Were you born knowing this was an Xbox logo?

The Template Theory

Throughout the years the bottom-up approach to perception in psychology has been well-documented and rigorously tested. Much of this stems from the works of J.J. Gibson (1904 - 1980) who held an extremist bottom-up view that we only require stimulation in one of the sensory modalities to allow us to perceive, meaning any higher top-down cognitive processes are not needed. Gibson argued that each of us is biologically tuned to respond to certain shapes from birth and no further learning is required for perception to occur. This is often referred to as a template theory and has further been developed by Selfridge and Neisser (1960) who believed that we are born with the knowledge of various templates which when combined allow us to perceive each and every object we encounter in the world.

Perceptual Constancy

- Size Constancy - You perceive that an object retains its size even when you are further away. An object in the distance appears smaller and less detailed; however, you do not perceive that the object itself is literally smaller, merely that it is further away.

- Shape Constancy - You perceive that an object retains its overall shape even when you view it from a different angle. When looking at a cup with the handle to the right you are able to rotate it so the handle is now on the left. When you do this you do not perceive that the cup transformed for the handle to appear on the opposite side. Instead, you perceive that the shape of the cup held constant and simply rotated on the spot.

To put this into context, imagine a cup viewed from straight ahead with the handle on your right. According to the template theory you would already have the knowledge of a typical cup stored somewhere in your mind, which would enable you to recognise when one is present. Not only this, but due to a phenomenon called 'perceptual constancy' you are able to recognise the object when the proximal stimulation is altered by changing your relative angle or distance to the object (note that size constancy and shape constancy are often referred to as separate elements and are outlined above). This means that you could flip the cup so the handle is now on the left and you would still recognise what it is, even though the visual input has changed.

The problem with this theory, however, is that it is too reductionist in its nature. For this to hold true you would need to already contain the knowledge of all things past and all things yet to come. It fails to take learning into account, as well as far more abstract ideas such as our perceptions of our own thoughts (called metacognition) or the knowledge of what an object is when you can only see its outline. How are we able to recognise a company or product logo, for example, and know it refers to something else altogether? Or how is it that children must learn to read if they are already born with the knowledge of all words and sentences?

Furthermore, the theory contradicts itself when you consider that the perception is simply a culmination of the sensory processes, yet changing the proximal stimulation does not alter the final perception of it even when viewed from a different angle. It could be argued that various templates exist for each object, however this is probably better explained by prototype and feature-detection theories.
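To make the objection concrete, here is a minimal Python sketch of strict template matching. It is only an illustration: the 5x5 'cup' grid and the names `CUP_TEMPLATE`, `mirror`, `matches_template` and `matches_any` are all made up for this example and do not come from any of the cited authors. It assumes a template is a fixed picture that the input must match cell for cell.

```python
# A toy 'cup' drawn on a 5x5 grid: '#' is ink, '.' is background.
# The handle sticks out on the right-hand side. (Hypothetical example data.)
CUP_TEMPLATE = [
    "#..#.",
    "#..##",
    "#..##",
    "#..#.",
    "####.",
]


def mirror(image):
    """Flip an image left to right, as if the cup were turned around."""
    return [row[::-1] for row in image]


def matches_template(image, template):
    """Strict template matching: every cell must be identical."""
    return image == template


def matches_any(image, templates):
    """The 'several templates per object' rescue: try each stored template."""
    return any(matches_template(image, t) for t in templates)


cup_handle_right = list(CUP_TEMPLATE)
cup_handle_left = mirror(CUP_TEMPLATE)

print(matches_template(cup_handle_right, CUP_TEMPLATE))  # True
print(matches_template(cup_handle_left, CUP_TEMPLATE))   # False
print(matches_any(cup_handle_left, [CUP_TEMPLATE, mirror(CUP_TEMPLATE)]))  # True
```

The flipped cup only matches once a second template has been stored for it, which hints at how quickly the number of templates would grow if every angle and distance needed its own.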

The Prototype Theory

Rosch (1973, 1975) proposed that rather than having a number of predefined templates within our minds, we instead categorise percepts by referencing prototypes. Prototypes are similar to templates in that they symbolise outlines or ideas of what an object should look like; however, unlike templates, which require an exact match, prototypes rely on best guesses when various features are in place. To further put this into context, imagine again the cup from the template scenario. Now imagine another cup that takes on a slightly different shape. For template theories to hold true you would need to have separate templates for each and every variation of cup, otherwise you may encounter one that is unrecognisable. The prototype theory, on the other hand, suggests that we recognise objects through the presence of various shapes, patterns and features which commonly (in our experience) result in what we know a cup to be. This means the body of the cup does not have to be perfectly rectangular as long as most of the features (handle to the side, hole in center, etc.) remain in place, and also means other features that are present can be overlooked, allowing the perception to remain constant. A prototype therefore is a representative, but not necessarily precise, idea of what a cup should look like and is far more flexible and realistic than a template would allow.
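The 'best guess' idea can be sketched in a few lines of Python. This is a rough illustration under my own assumptions, not Rosch's model: the feature names, the `CUP_PROTOTYPE` set and the `MATCH_THRESHOLD` cut-off are all invented here. An object counts as a cup when it shares enough of the prototype's features, and anything extra is simply ignored.

```python
# Hypothetical feature list for a 'typical' cup; not taken from Rosch.
CUP_PROTOTYPE = {"handle on side", "opening at top", "hollow body", "flat base"}

MATCH_THRESHOLD = 0.75  # assumed cut-off for 'close enough to the prototype'


def similarity(features, prototype):
    """Fraction of the prototype's features that the object shares."""
    return len(features & prototype) / len(prototype)


def is_cup(features):
    """Best guess: accept the object if it is similar enough to the prototype."""
    return similarity(features, CUP_PROTOTYPE) >= MATCH_THRESHOLD


tall_mug = {"handle on side", "opening at top", "hollow body", "flat base",
            "striped pattern"}
espresso_cup = {"handle on side", "opening at top", "hollow body",
                "spotted pattern"}
plate = {"flat base", "spotted pattern"}

print(similarity(tall_mug, CUP_PROTOTYPE))      # 1.0
print(similarity(espresso_cup, CUP_PROTOTYPE))  # 0.75
print(similarity(plate, CUP_PROTOTYPE))         # 0.25
print(is_cup(tall_mug), is_cup(espresso_cup), is_cup(plate))  # True True False
```

Notice that the striped and spotted patterns play no part in the decision: both cups pass, and the sketch has no way of telling one from the other.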

I like cups apparently

The problem with prototypes is that they fail to home in on the specifics of an object and also imply a degree of learning is involved through the acquisition of new prototypes when the bottom-up approach believes that only sensation is required. For example, how are we able to tell the difference between two cups of the same size and shape when each has a different pattern on the body? A prototype would be unable to differentiate the two because these features would be seemingly arbitrary in classifying the cup. In an attempt to combat these questions, bottom-up theorists instead turned their attention to feature-detection.

Feature-detection Theories

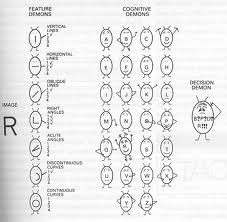

Sometimes referred to as 'feature-matching' theories, feature-detection suggests that we match features of objects to form an overall perception in a sort of hierarchical manner. By far one of the most popular of these theories is the 'pandemonium' model (Selfridge, 1959), most likely due to its amusing suggestion of metaphorical 'demons' desperately scampering around our minds while trying to solve the chaos of the incoming stimuli.

According to the pandemonium model there are 4 types of demons that work together to make up the process of perception. The first are the 'image demons', which capture the initial image of a stimulus in the retina and pass it to the 'feature demons'. These feature demons then 'call out' when a part or feature of the stimulus is recognised. As a very basic example, think about the letter 'L'. One could argue that this has 2 main features which combine to form the overall image. These are the straight, vertical line making the body of the letter, and a straight, horizontal line protruding outwards to the right from the base of the vertical one. The feature demons that correspond with these lines would then call out to let the 'cognitive demons' know they are present, and the cognitive demons would then cross-reference these features with any possible matches stored in memory. Finally, the 'decision demon' comes along and analyses the 'pandemonium' of all the shouting demons, picks out the cognitive demons that appear to be shouting the loudest, and combines the results to form the final percept of the stimulus.
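The four stages described above can be sketched as a tiny Python pipeline. Treat it as a toy under stated assumptions rather than Selfridge's actual model: the `LETTER_FEATURES` inventory and the way loudness is scored (matched features minus missing and unexpected ones) are my own inventions, and the decision demon here simply picks the single loudest voice rather than combining several.

```python
# Hypothetical feature inventory for a handful of capital letters.
# These feature lists are assumptions made for illustration only.
LETTER_FEATURES = {
    "L": {"vertical line", "horizontal line at base"},
    "T": {"vertical line", "horizontal line at top"},
    "I": {"vertical line"},
    "E": {"vertical line", "horizontal line at base",
          "horizontal line at top", "horizontal line in middle"},
}


def feature_demons(image_features):
    """Each feature demon 'calls out' if its feature is in the image."""
    known_features = set().union(*LETTER_FEATURES.values())
    return {f for f in known_features if f in image_features}


def cognitive_demons(shouted):
    """Each cognitive demon shouts louder the better its letter fits.

    Assumed scoring: matched features, minus features the letter expects
    but nobody shouted, minus shouted features the letter does not have.
    """
    loudness = {}
    for letter, expected in LETTER_FEATURES.items():
        hits = len(expected & shouted)
        misses = len(expected - shouted)
        extras = len(shouted - expected)
        loudness[letter] = hits - misses - extras
    return loudness


def decision_demon(loudness):
    """Pick the cognitive demon that is shouting the loudest."""
    return max(loudness, key=loudness.get)


def perceive(image_features):
    # The image demon simply passes the raw input along unchanged.
    shouted = feature_demons(image_features)
    return decision_demon(cognitive_demons(shouted))


print(perceive({"vertical line", "horizontal line at base"}))  # L
print(perceive({"vertical line", "horizontal line at top"}))   # T
```

Even in this toy version the cognitive demons only work because `LETTER_FEATURES` is already stored somewhere, which is worth keeping in mind when weighing up how 'bottom-up' the model really is.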

According to Selfridge, everyone has their demons

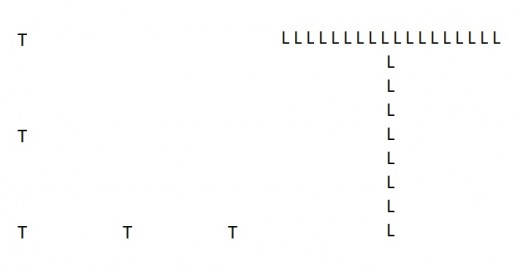

Feature-detection has also been expanded to identify 'local-precedence' (Martin, 1979) and 'global-precedence' (Navon, 1977) effects. A local-precedence effect occurs when local (smaller or unique) features are detected in an image, whereas global-precedence takes place when the features form a larger image or a wider outline is identified. To better demonstrate this effect, take a look at the below image. You will notice that the 'T' shapes on the left are spaced so far apart that they stand out more as individual letters, whereas the image to the right stands out more as a larger 'T' even though it is formed of lots of smaller 'Ls' put together. This is because the 'Ts' on the left trigger a local precedence effect where less detail causes the individual parts to stand out more, and the 'Ls' on the right trigger a global-precedence effect where more detail comes together to form a larger, overall image.
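If you would like to build figures like these yourself, the short Python sketch below draws a large letter out of smaller ones in plain text. The `BIG_T` bitmap, the `compound_letter` function and its `gap` parameter are hypothetical names chosen for this example. It only generates the stimuli; whether a given spacing actually tips perception toward the local or the global shape is an empirical matter that the code cannot settle.

```python
# 5x5 bitmap of a capital 'T'; '1' marks a cell that should be filled.
BIG_T = [
    "11111",
    "00100",
    "00100",
    "00100",
    "00100",
]


def compound_letter(shape, small_letter, gap=0):
    """Draw `shape` out of copies of `small_letter`.

    `gap` is the number of blank columns between neighbouring cells and
    blank rows between neighbouring rows; a larger gap gives a sparser figure.
    """
    lines = []
    for row in shape:
        cells = [small_letter if cell == "1" else " " for cell in row]
        lines.append((" " * gap).join(cells).rstrip())
        lines.extend([""] * gap)
    # Drop the blank rows that were added after the final row.
    return lines[:len(lines) - gap] if gap else lines


dense = compound_letter(BIG_T, "L")          # tightly packed small letters
sparse = compound_letter(BIG_T, "L", gap=3)  # the same figure, spread out

print("\n".join(dense))
print()
print("\n".join(sparse))
```

Changing `gap` or swapping the small letter lets you produce denser or sparser versions of the same figure to compare side by side.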

Local and global-precedence effects

Perhaps the most striking early evidence of feature detection comes from the research of Hubel & Wiesel, who won a Nobel Prize in 1981 for the discovery that the cells in the visual cortex (the area of your brain that primarily handles vision) respond to "specifically oriented line segments" (Hubel & Wiesel, 1979, p. 9). By recording the activity of cells in the visual cortex of cats and monkeys while the animals were shown straight lines tilted at different angles, they found that individual cells increased or decreased their activity according to the orientation of the line. This activity was further altered by which section of the retina the light from the stimulus was reaching, meaning each neuron of the visual cortex can be mapped to a specific part of the retina, allowing the brain to further differentiate between the locations and orientations of the lines.

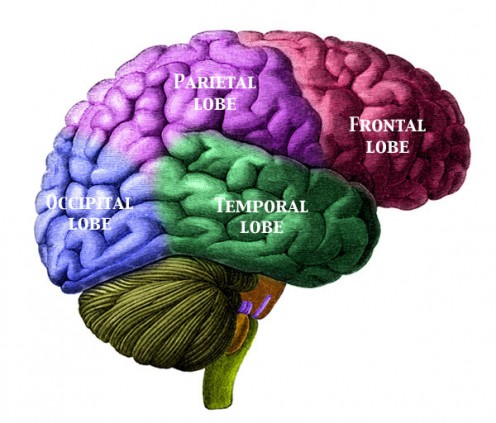

Indeed, it is not only straight line segments that the brain has been found to recognise; there also appear to exist "separate neural pathways in the cerebral cortex for processing different aspects of the same stimuli" (Sternberg, 2009, p. 109). The cerebral cortex is the outermost layer of the brain and appears to be concerned with such cognitive functions as memory, attention, thought and language (among others). In other words, it is the wrinkled surface you see when observing the brain directly. The two pathways have been termed the 'what' and 'where' pathways. The 'what' pathway is responsible for identifying a stimulus, as well as recognising its shape and colour, whereas the 'where' pathway processes the location and movement of the stimulus. In the brain, the 'what' pathway is considered to descend from the occipital lobe (home of the visual cortex, the main visual processing center) toward the temporal lobes. The temporal lobes are located at the lower sides of the brain, in front of the occipital lobe. These are thought to be involved in the retention of memories from vision, as well as processing sensory input, emotion and deriving meaning from concepts and events. The 'where' pathway is also considered to start from the occipital lobe but ends in the parietal lobe, which is situated above the temporal lobes. The parietal lobe integrates information from all the sensory modalities with an emphasis on spatial sense and navigation.

The cerebral cortex

As convincing as feature-detection theories sound, however, care should be taken when interpreting their results. For example, the pandemonium model provides yet another example of where a bottom-up theory brings in memory and prior learning to explain perception. If perception is simply the end result of sensation then what are our cognitive demons for? Furthermore, Hubel and Wiesel did not identify causality for the processes they witnessed, nor did their work identify how the brain is able to perceive shapes and objects other than straight line orientations. As their subjects were all adults it is possible that the brain is able to learn to recognise other shapes in some way, or even that the neurons they observed had learned to fire in response to certain line orientations (this would be an example of 'brain plasticity', which is beyond the scope of this article). Finally, the 'what' and 'where' pathways link to other brain regions which have become known for their roles in memory and navigation. This means it is highly possible that the occipital lobe simply screens the visual input before sending it to the appropriate brain regions, which then act upon the information to formulate the final percept. Although this harkens back to the pandemonium model, it also further undermines it, because if the occipital lobe were the home of the feature demons, then how are they able to recognise which lobes to send the information to, considering their only role is to recognise features? Additionally, if the parietal and temporal lobes were considered to be home to the cognitive demons, then where does the decision demon live and how is it able to tell the difference between the cognitive demons in each brain region?

The more likely story is that the brain is a complex organ which simultaneously processes various sensory inputs, as well as a multitude of internal processes related to memories, thoughts and emotions (Martin, 2006). It is through combining and understanding these parallel yet interconnected processes that psychology aims to better understand the brain and all its functions.

Thank you for reading

I hope you enjoyed reading this article, and I sincerely hope it was informative and useful. As this is my first of many planned articles I welcome your feedback and suggestions if you feel it can be improved.

References

Gibson, J. J. (1966). The senses considered as perceptual systems. New York: Houghton Mifflin.

Gibson, J. J. (1979). The ecological approach to visual perception. Boston: Houghton Mifflin.

Hubel, D., & Wiesel, T. (1979). Brain mechanisms of vision. Scientific American, 241, 150-162.

Martin, M. (1979). Local and global processing: The role of sparsity. Memory & Cognition, 7, 476-484.

Martin, N. G. (2006). Human Neuropsychology, 2nd edition, Harlow, England: Pearson Education Limited.

Navon, D. (1977). Forest before trees: The precedence of global features in visual perception. Cognitive Psychology, 9, 353-383.

Rosch, E. H. (1973). Natural categories. Cognitive Psychology, 4, 328–50.

Rosch, E. H. (1975). Cognitive reference points. Cognitive Psychology, 7 (4), 532–47.

Selfridge, O. G. (1959). Pandemonium: A paradigm for learning. In D. V. Blake & A. M. Uttley (Eds.), Proceedings of the Symposium on the Mechanization of Thought Processes (pp. 511-529). London: Her Majesty's Stationery Office.

Selfridge, O. G., & Neisser, U. (1960). Pattern recognition by machine. Scientific American, 203, 60-68.

Sternberg, R. J. (2009). Cognitive Psychology, Belmont: Wadsworth.

Toates, F. M. (2007). Biological Psychology, 2nd edition, Harlow, England: Pearson Education Limited.