Comparative Analysis of Hardware and Software ILP Techniques

Abstract

This paper explores the depths of ILP techniques, dividing the topic into symmetrical discussions of software and hardware techniques. What makes this paper worth its time in reading effort is the structure and method of broaching the subject. It explores the hardware ILP methods and symmetrically, in the same position in the software section describes a parallel method making it extremely easy to locate contending methods in both hardware and software. We conclude the software methods can match hardware methods in fighting the limitations on ILP but face a great handicap when it comes to the metrics of design compatibility and market success.

I. Introduction

ILP is the acronym that stands for Instruction Level Parallelism. Put simply, ILP is the ability to evaluate instructions in parallel. The trick is to be able to overlap as many instructions as possible while maintaining precise exceptions. The introduction of instruction level parallelism (ILP) helped increase the throughput of pipelines. Yet, ILP techniques are naturally restricted but regular ceilings that limit pipelines like the number or pipeline stages, exceptions and how many instructions can be executed in parallel without stalls assuming we have enough resources in a program with respect to the dependent instructions that must be executed in order. Another major limitation is the delay due to data transfer across the chip. ILP techniques could be divided into two major techniques. The first describes methods used for improving hardware ILP while the second describes ILP methods utilizing software methods for ILP improvement. This paper describes those two methods and demonstrates through examples of these techniques studied in the computer architecture class EECE421.

II. Limits and Challenges of ILP

Most computer architectures are use a sequential model when executing instructions, relying primarily on a program counter that indicates which instruction is to be issued next [3]. This program counter is supposed to keep program issue in order when in fact it clashes with the system’s ability to have a precise exception. The best way to describe a precise exception is a conveyor belt on a production line. The instructions in the machine are bottles moving from one station –pipeline stage- to another. It is expected that when bottle –instruction- jams, the bottles before have not been processed but the current station the jammed bottle is in while the bottles that have already passed that station before the bottle jammed should be perfectly fine. In the light of this analogy, we need to emphasize the importance of keeping track of the location of the interrupt –jammed bottle- and the current state of the registers and memory.

To highlight what interrupts are would be the next smart move. An interrupt is a signal that informs the system that an illegal even occurred. The system can then either ignore it or proceed to take action as its specific protocol indicates. We can also divide interrupts in another way instead of precise and imprecise. Interrupts can be divided into two classes: Program interrupts –traps- and external interrupts. Program interrupts are mainly caused by software errors nominally exceptions caused by instruction fetching and decoding. External interrupts are as the name implies caused by external sources like I/O interrupts [1].

One reason why an interrupt could occur is if a real data dependence –RAW dependence- occurred. A RAW is the most common type of data hazard that is usually treated by stalling. It occurs when a data location that has not been written yet is being attempted to be read thus making it not possible for these two instructions of chain of instruction to be executed in parallel let alone simultaneously. When dealing with a data hazard of this type, it is important to maintain program order to insure that the read is executed after the proper write. This is where dynamic scheduling becomes an issue at hand. In order to maintain proper program order while maintaining that there is enough time for an instruction to write data before the read instruction is executing –avoiding RAW data hazards-, dynamically scheduled processors rely on dynamic memory disambiguation. Memory disambiguation simply put is the maintenance of a certain order between loads and their corresponding stores in order to implement a program correctly especially if it running instructions in parallel. To do that, we have to have a sort of a method to know all the previous stores that have not yet been retired when a load is being executed.

There are other types of hazards generally called false dependences. The first is called an anti-dependence or WAR –write after read- hazard. It happens when a memory location is read before the proper write instruction got to write that location resulting in reading the old data not meant for that specific round of reads. The other type of hazards is called an output dependence or WAW –write after write – dependence. It happens when a data location written twice or overwritten by other writes leaving no room for whatever read that wanted the previous data to be achieved. This kind of hazard only occurs in pipelines that can write more that on instruction at a time or allow an instruction to precede or skip a stalled instruction before it [2]. A totally different type of dependence is control dependence. It happens when instructions have to be ordered with respect to a branch; and generally any other kind of instruction that changes the PC. This makes the task of parallelizing instruction more complicated because even with frequently taken not-taken branches, a certain order of instructions has to be maintained to avoid control hazards making taking instructions from different simple blocks almost impossible since even though we may be attempting to change instruction order, it is hard to do so in the presence of control dependences without affecting how the exception is being raised and causing delays.

Another reason for delays specifically unexpected ones is cache misses. Cache misses happen when a request to read cannot be satisfied by the cache and thus has to be moved to a higher level memory. The fact that we are not sure if the requested data is in the cache or not is what makes cache misses unpredictable and makes us favor smaller programs and encourage greater ILP exploitation

![Figure 1: The basic structure of a MIPS floating-point unit using Tomasulo’s algorithm [1] Figure 1: The basic structure of a MIPS floating-point unit using Tomasulo’s algorithm [1]](https://usercontent1.hubstatic.com/5577220_f520.jpg)

![Figure 2: The 2-bit branch predictor [1] Figure 2: The 2-bit branch predictor [1]](https://usercontent1.hubstatic.com/5577226_f520.jpg)

![Figure 3: Global branch predictor with indexing [2] Figure 3: Global branch predictor with indexing [2]](https://usercontent1.hubstatic.com/5577230.png)

III. HARDWARE METHODS FOR EXPLOITING ILP

Not all instructions need the same delay to go through all pipeline stages making it important to have pipeline registers to synchronize individual cycles and cycle stages so that data from different cycles and cycle stages do not get mixed up. The usage of the pipelining technique results in better CPI and thus good speedup close to a multiple of the number of pipeline stages. Yet, the side effect is the increase in power consumption, the need to add more hardware and a bigger cost for a branch misprediction. Instead of maintaining sequential execution out-of-order execution could be an option. Another name for this kind of execution is dynamic execution. To preserve the sequentially of execution, many methods have been devised like the re-order buffer, the history buffer and the future file. A re-order buffer is useful when instructions are allowed to finish out of order. It reorders these instructions before they modify the process state. A history buffer is a buffer that systematically stores exception reports using tags. When using this method, out-of-order writes are allowed only the history of all the register values is stored in the history buffer. When an exception occurs, all instructions are reset using the values from the history buffer. This could take a long time if the history buffer is big the restoration of the system to its previous condition may take as long as the history buffer itself. A compromise from the history buffer is the future file. It uses a re-order buffer and two register files but restores the instructions upon an exceptions from one of them –architectural file- only.

A.Real data dependences

In a normal pipeline, the simplest way to deal with a RAW is through bypassing data from the ALU to the pipeline stage that needs the data thus meaning more hardware additions. An alternate method is to use a reservation station –RS- like in Tomasulo’s (Figure 1) Algorithm. In Tomasulo’s algorithm a reservation station is placed at the input of every stage. A reservation station basically extends the register set in this algorithm. Its basic role is to provide operands to the instructions and insure that an instruction exits it only if complete with all its operands. Tomasulo’s algorithm even insures that instructions dependent on previous execution results find the appropriate operand by snooping the CDB –common data bus-and identifying these specific results through tags given earlier upon entry into the RS.

B.Memory disambiguation

It is quite straight forward to address memory disambiguation through hardware ILP techniques. All that has to be done is make sure that the instructions that are dependent maintain a specific order throughout their trip inside the pipeline and all the way out of it. This is done by putting loads and stores in specific order inside a queue. The nature of a queue itself insures that the instruction first inserted is allowed to exit first. A common structure that is in queue form and helps the memory disambiguation aspect is the RS and its employed tags mentioned earlier. The same architecture that uses the RS uses Store and Load buffers. Load and store buffers hold either data or addresses to and from memory. They are extra registers for storage and are similar in structure to RS and act like it only they handle loads and stores also utilizing tags and operating in a queue fashion. This is done by maintaining program order between loads and stores by utilizing data address calculation and store to load forwarding. It means that the proper data is in the store buffer is forwarded to the proper data in the load buffer by using the matching tags associated with the instructions and computing the proper address of the store in question. Similar to other instructions in the RS waiting for operands, loads and stores also wait for executed instructions matching the tag.

C.False data dependences

When it comes to false data dependences, the hardware approach is to avoid them all together. This is done via techniques such as register renaming. I will discuss two methods to approaching that. The first is through register windows that are register banks that allocate several registers for one variable so its present and all its past values –to a point- are available such that when a specific value of variable is always present and its retrieval is only a matter of moving a pointer through the register window. The second method is utilized in Tomasulo’s algorithm. It corresponds to renaming results using reservation station numbers.

D.Control hazards

Control hazards could be one of the most complicated dependencies to resolve. Mainly, control hazards are resolves using methods of prediction. Branch prediction put in layman terms is the prediction of a branch’s outcome before the actual outcome is computed. There several kinds of predictors utilized but they all rely on two variables:

1-History stored of previous branches or loops

2-Program counter (PC)

The difference is in which of the two is utilized to do the actual predicting. The Bimodal branch predictor simply relies on PC for prediction. A counter is incremented if the branch is actually predicted and decremented otherwise. Whether or not the prediction is correct or not is not an issue.

Local branch prediction spices the prediction up by adding branch history to the equation.

Global branch predictors simply rely on a shift register –GR- that record the last direction taken by the branch. Global predictors with indexing (figure 2) not only use GR but also add PC to keep an index like the one used in a bimodal predictor.

A combined predictor basically is a predictor that predicts which predictor to use in case of a specific branch case

E. Frequent branches and limited ILP in basic blocks

Frequent branches are a common thing when executing a programs especially one heavy on loops. In such cases, branch speculation could save a lot of pipelining time. The idea of speculation is to actually predict a branch outcome and assume the prediction is correct and go ahead with the program before the actual branch direction is computed. Although this technique saves a lot of time, the price of a branch misprediction is quite in issue here. All the instructions executed in the wrong branch direction have to have their effects undone and the PC decremented. This brings again the issue of the need for a history buffer and/or a future file.

![Figure 4: Major types of compiler optimizations [1] Figure 4: Major types of compiler optimizations [1]](https://usercontent2.hubstatic.com/5577255.png)

IV. SOFTWARE METHODS FOR EXPLOITING ILP

Software methods for exploiting ILP are exactly as the name says, methods that utilize programming techniques to enhance pipelining and increase throughput. Software methods were used in previous architectures like the VLIW –very long instruction word- architecture. It is a general characteristic of these kinds of methods to be highly dependent on the compiler.

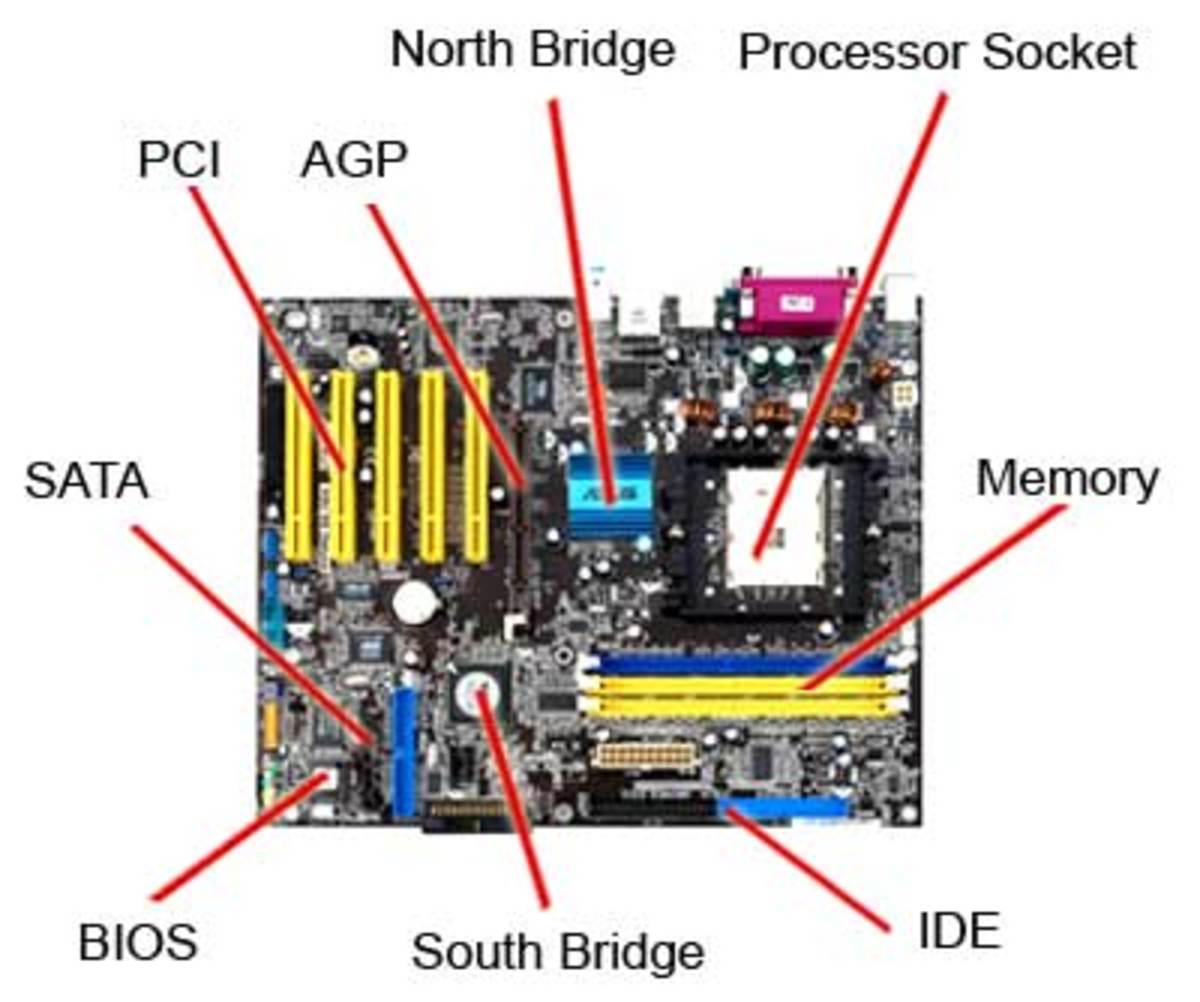

Software methods have a totally different approach to dealing with the issue of sequentiallity of architectures in comparison to hardware methods.These techniques are mainly compiler oriented. The compiler is used to detect and schedule tasks that are in independent while execution that is mainly hardware-dependent is left to be sequential. Aside from that, the compiler performs the task of big hardware like history buffers and future files simply by adding recovery code that checks for the validity of an exception and makes sure that compiler choices can be undone. Figure 3 is a table that shows some compiler-type optimizations and their relative frequency of occurrence.

A. Real data dependences

A technique commonly used for software ILP is copy propagation. Copy propagation relies on the fact that many variables or operands are reused in many other instructions or locations [1]. Copy propagation replaces all instances of a variable by their assigned value. Since recurrence embodied in loops is a major handicap in avoiding real data dependences, compilers simplify or eliminate array addressing and calculation within loops. They do that through scheduling and loop unrolling. Scheduling independent instructions dependent time-consuming instructions gives them time to execute thereby avoiding RAW hazards. Similarly, unrolling loops allows hidden RAW dependencies within a loop to be located and dealt with. Put in other words, loops have great overhead. The compiler, when faced with small loops, can decide to take the loop and remove the branch, thus executing as if the branch is a regular instruction. Another method is splitting the loop at static time making more instructions execute on parallel.

B. Memory disambiguation

The same techniques that protect the architecture from RAW dependences also cause memory disambiguity. Loop splitting and unrolling help insure that dependent loads and stores are in their proper sequence when it comes to static compiler work. In regards to dynamic compiler work, it is a totally different issue to protect the memory from ambiguity. The computer can assume dependence and work from there, or it can assume independence and then try install recovery code after an exception is sensed. The Itanium architecture uses special instructions but leaves the check if the dependence or independence assumption is correct or not to the hardware.

C. False data dependences

When dealing with WAR and WAW hazards using software techniques, the compiler while performing loop unrolling or instruction re-order resorts to renaming registers. By this we notice that renaming using compiler is similar in technique to renaming using register windows

D. Control hazards

Controlling branch induced control hazards is done through scheduling and loop unrolling. Scheduling independent instructions between branch calls and the actual branching gives the branch time to compute the proper branch direction.

E. Frequent branches and limited ILP in basic blocks

Loop unrolling is a good method to identify frequent branches because loops are a very common place to find frequent branches. The dependencies that relate some instructions within a loop limit how much pipelining his possible within a single block. The unrolling or loops and global scheduling organize instructions within a pipeling cycle such that no dependencies are disturbed at the same time independent instruction from other loops are executed instead of wasting resources on NOPs. Also, the utilization of speculation is important here because having a load instruction before a branch can in many cases cause an exception. Thus in the IA-64 architecture, speculative loads are instructions that retrieve data from the memory while the compiler performs exception detection. A check instruction is placed in the location of the original load and if an exception occurs when executed moves to recovery code.

V. COMPARING HARDWARE & SOFTWARE METHODS FOR EXPLOITING ILP

When comparing two major methods of ILP implementation, it is important to discuss the following:

- Performance

- Power

- Area

- Binary Compatibility

- Design Compatibility

- Market access

Performance wise, we find as in the example of the VLIW software ILP methods are quite impressive with respect to hardware methods but power wise, both hardware addition and adding recovery code after branch misprediction and code for loop unrolling are extremely power consuming. Area wise and binary compatibility wise software methods have the upper hand because heavy compiler reliance and optimization decrease hardware area and software methods allows for better usage due to variable pipeline width unlike the fixed pipeline width that has to make do with using NOPs when no independent instructions are parallelizable anymore. It is quite handy that in software ILP techniques the pipeling width is variable and also more binary compatible.

Talking about design compatibility and market success, the hardware techniques get the upper hand since common market machines are designed for hardware ILP more that software and the actual production of VLIW-like machines was a failing experiment.

VI. CONCLUSION

Both techniques address all the discussed limits on ILP, yet we see that hardware methods specialize in eradicating special limits while software specializes in others. We see that with only a few software concepts we are able to match hardware methods, but we fail in some aspects. Software methods fail when it comes to design compatibility and market access.

REFERENCES

[1] Hennessy Patterson, “Computer Architecture, a quantitative approach”, 4th ed.

[2] Scott McFarling, “Combining Branch Predictors”

[3] James E. Smith, “Implementation of Precise Interrupts in Pipelined Processors”