Googlecracy: the Web in the Days of Google

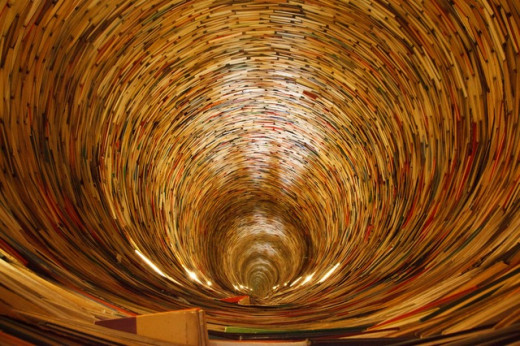

The Web, like the Universe, is a vast and largely unexplored agglomerate of sites and, as the Universe is thought to do, it is expanding at a speed that nobody can estimate exactly. If you try to determine how many pages are on the Web, you will immediately see that the answer is not easy and that the statistics on this question are contradictory. One estimate says one trillion pages, but every estimate risks being quickly surpassed, because the number of pages grows at a rate that nobody can really imagine. The Web is by far the largest collection of information that humanity has ever seen.

On the other hand, the number of Internet users is estimated at more than two billion. So the way this large public can access this big, disorganized mass of information is crucial. The need for search engines has been evident since the dawn of the Internet. But the enormous power that search engines have gained in directing traffic to sites deserves some consideration.

The Unstoppable Rise of Google

Back in the prehistoric year of 1998, the Net was still in its infancy. Social networks had not been invented yet and the most used search engine was Altavista. People who, like me, have some years on their shoulders well remember Altavista’s page, crowded with channels and topped by the search bar.

The appearance of Google’s clean page was a revolution. As is well known, Google’s greatest innovation was to treat the number of links pointing to a page as a significant parameter in its ranking. However, in my opinion Google’s success was determined above all by two less technical factors: the essential “look and feel” of its page and the lucky name, easy to remember and to type on the keyboard (it seems to have originated from the word “googol”, the number one followed by 100 zeros).
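To make the idea of counting links concrete, here is a minimal Python sketch that orders pages by the number of other pages linking to them. The page names and the link graph are invented for illustration, and a plain inbound-link count is only an assumption standing in for Google's real ranking, which is far more elaborate and not public.

```python
from collections import Counter

# Hypothetical link graph: each page maps to the pages it links to.
links = {
    "a.example": ["b.example", "c.example"],
    "b.example": ["c.example"],
    "c.example": ["a.example"],
    "d.example": ["c.example", "a.example"],
}


def rank_by_inbound_links(graph):
    """Return (page, inbound_count) pairs, most linked-to page first."""
    inbound = Counter({page: 0 for page in graph})
    for source, targets in graph.items():
        for target in set(targets):   # count each linking page only once
            if target != source:      # ignore links from a page to itself
                inbound[target] += 1
    # Sort by count (descending), then by name to keep the order stable.
    return sorted(inbound.items(), key=lambda item: (-item[1], item[0]))


ranking = rank_by_inbound_links(links)
for page, count in ranking:
    print(page, count)
```

Even this toy version shows why such a signal can be gamed: adding a handful of pages that all link to `d.example` would lift it up the list without changing its quality at all.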

Counting links may well have been an innovative idea, useful for delivering better results, but it has also given life to the shameless market in links that everyone on the Internet knows and that generally has little or nothing to do with the quality of a site. In truth, any method used to evaluate a site must remain secret to be really effective. But Google, which nowadays keeps secret the 200 parameters it says it uses to evaluate a site, at that time had an interest in publicizing its method, because in this way it could appear innovative and attract more visitors.

Anyway, within a few years Altavista declined (it has now completely disappeared) and Google became the absolute dominator of Internet searches. It is a company employing 46,000 people, with a yearly revenue of 50 billion dollars. It is the fifth most valuable brand in the world, according to the Forbes ranking. It is the most visited site in the world and controls 80% of search traffic (67% in the US). To serve an average of five billion searches per day it has an infrastructure estimated at more than 1,000,000 servers, hosted in ten data centres around the world. There is no doubt that this is a monopoly. When you search for a keyword on the Internet, eight times out of ten it is Google that determines the page you will reach and, if you create a page, its success lies in the hands of Google.

Forgetful of how Google had prevailed over its rival, competitors such as Yahoo! and Bing have conformed to Google’s model, adopting substantially the same style. This does not necessarily mean that Google does not do its best to provide users with good results. However, there are some factors in the way Google and the other search engines work that strongly condition the Web’s behaviour. This is what I mean by the term Googlecracy: Google conditions both the behaviour of the authors who publish content on the Internet and the experience of the users searching for that content.

How Search Engines Affect the Content on the Internet

As everyone knows, search engines use algorithms to determine how well a page matches the keywords searched. So a page, to be found by users, needs to fit the parameters of the algorithm better than other pages do. The algorithms used by search engines are obviously kept secret; nevertheless, a specific discipline called SEO (Search Engine Optimization) studies how to build a page to obtain the best results with search engines. Since most searches pass through Google, the experts of this discipline mostly look to Google and hand out their masterly recipes for climbing Google’s ranking. They remind me of the priests of a religion devoted to the god Google, always ready to listen to the oracles of the god (Google’s various search managers), who occasionally pronounce some cryptic words. They translate these words into prescriptions for the mass of faithful worshipers, i.e. the mass of people who desperately try to earn some money by sacrificing to the god, i.e. by struggling to put content on the Internet. In fact, every time the oracles speak and announce a new resolution of the god (for example the latest Hummingbird update), the mass of worshipers frets and fears the moods of a god who continuously changes its mind.

I am kidding, but the real result of all this is that authors are generally more concerned with the techniques for ranking well than with the quality of their articles, and they worry about getting links rather than infusing original ideas into their content. To be fair, Google says that it is more and more interested in the quality of a page. This is probably true, because Google too has an interest in delivering good-quality content. But whatever Google’s model for assessing the quality of an article may be, it will eventually lead to a standardization of all content on the Internet because, Google being the dominant search engine, all authors must aspire to fit its model. And the pages that do not fit the model will rank low on the search results page, so users will generally be served results of the same type. This means that the Internet risks becoming the realm of conformism, or at least that only conformist results are likely to be found.

There is another aspect that reinforces this tendency towards conformism. You may have a revolutionary idea, but if it is not expressed through the keywords that people search for, it is not likely to be found. For example, this article has little chance of being found, because the word Googlecracy is very rarely used and nobody searches for it. It is a gamble: if this word becomes more widely used in the future, I will have the advantage of already being on the Net. But this conformism may also have more serious consequences. If Newton had put his work on the Internet, nobody would have discovered his theory through a search engine, because obviously nobody would have thought to search for the word “gravity” (or gravitas, since he wrote in Latin, if I am not mistaken). His only chance would have been to turn to social sites.

In the same way, SEO experts recommend avoiding articles about overly competitive keywords, i.e. keywords that return millions of results. Millions of results means no chance of being on the first page of Google results, and therefore no traffic. But at the same time, you must avoid keywords that nobody searches for. Even if you have a theory as great as gravity or relativity, you have no chance of being found with keywords that are not searched. The Internet forces us to write only about the topics that people search for. Goodbye, innovation.

Find search niches

| | Number of monthly searches | Number of general results | Number of "inanchor" websites | Number of "intitle" websites |
|---|---|---|---|---|
| "keyword" | How much the keyword is searched (recommended: > 1,000 and < 3,000) | How many results are returned for the keyword (recommended: < 1,000,000) | How many pages have an anchor link to the keyword (recommended: < 1,000) | How many pages have the keyword in the title |

An SEO technique to find search niches, i.e. keywords with low competition and a reasonable number of monthly searches.
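The thresholds in the table can be turned into a simple filter. The Python sketch below is only an illustration under stated assumptions: the `KeywordStats` class, the `is_niche` function and the sample figures are all hypothetical, and the numbers would in practice have to be collected by hand or from a keyword tool. Since the table gives no recommended limit for "intitle" pages, that figure is stored but not used in the test.

```python
from dataclasses import dataclass


@dataclass
class KeywordStats:
    """Figures gathered for one keyword (hypothetical structure)."""
    keyword: str
    monthly_searches: int
    general_results: int
    inanchor_pages: int
    intitle_pages: int  # kept for reference; no threshold is given for it


def is_niche(stats, min_searches=1_000, max_searches=3_000,
             max_results=1_000_000, max_inanchor=1_000):
    """Apply the recommended limits from the table to one keyword."""
    return (
        min_searches < stats.monthly_searches < max_searches
        and stats.general_results < max_results
        and stats.inanchor_pages < max_inanchor
    )


# Invented sample data, for illustration only.
candidates = [
    KeywordStats("seo", 500_000, 200_000_000, 90_000, 120_000),
    KeywordStats("hypothetical niche phrase", 1_800, 400_000, 300, 2_500),
]

niches = [stats.keyword for stats in candidates if is_niche(stats)]
print(niches)
```

The limits are passed as parameters so that they can be loosened or tightened without touching the logic.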

How Search Engines Affect the Users’ Experience

If you are a young author of great promise, you may want to study SEO techniques in depth before uploading your first article. So you go to Google and anxiously type the word SEO in the search bar. But what springs out would intimidate even the most willing apprentice: 200,000,000 results are a thoroughly indigestible meal. Fortunately, you do not need to read all those pages; you just want an overview of the topic and some good ideas for promoting your article. So, like everyone else, you begin from the top of the list. At the top there is, as always, the Wikipedia page, which explains what SEO means and what SEO does. Good, but you still lack the most important information: some useful tips to improve your results. You read the Google support page. It provides some interesting additional information, but still nothing tells you what to do to be a successful author. Some of the results are sites of professionals who offer their services, but you do not have a budget to spend; you must rely on your own resources. Now you understand that your search was too generic and that you should have been more specific, for example by searching for something like “seo tips” (37,400,000 results) or even by hazarding something more explicit: “how to write a successful article” (151,000,000 results). Now you have some cause for satisfaction: finally you have found something useful.

You have learned that, to find the information you want, you must guess the right keywords. However, you still have a doubt: 151,000,000 pages are a treasure that you will never be able to dig up. Do you trust Google and believe that the best results are all on the first pages? Or do you believe that the other millions of pages may contain at least some additional information, and that a significant amount of information is going to waste?

How Should Search Engines Evolve?

This is the classic million-dollar question. Nowadays, as I said, searches are dominated by Google and the other search engines do not offer a significant alternative. The risk that we can find only what Google wants is real. However, the question is a general one. In practice the traditional technique of search engines, with results ordered in pages, allows only a very small portion of the documents related to a keyword to come to light. This may be perfectly acceptable if I search for the address of a restaurant or the birth date of Napoleon, and less acceptable if I search for the meaning of the Mona Lisa’s smile or for tips to drive traffic to my articles.

The point is that it is virtually impossible to establish, among millions and millions of pages, which is the best answer to a keyword. By using the links that point to a page (and now, it seems, also taking into account its popularity on social media), Google has introduced a “human factor” into the evaluation of the page. The best page is the page most liked. This is relevant, but it is not decisive.

When I search for a keyword, the search engine cannot know exactly what I expect to find. I may be looking for a “wikipedic” dissertation, or I may be interested in a personal vision, an opinion. To allow users to better find what they are looking for, search engines should give them more means to determine the results. Users should be able to take control of the search and be free to decide how much weight to give to each parameter. So, when I do a search, I would expect to be asked whether I would like to see institutional sites first, or personal sites, or sites with many links or with a great reputation on social networks, or even to leave room for randomness. This is contrary to the philosophy of “I’m Feeling Lucky”, the other “workhorse” of Google, which means “let me do it for you”. In the next generation of search engines, users should, I think, have the ability to determine the criteria for ranking the results, if they want to.
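As a rough illustration of what user-controlled ranking could look like, the Python sketch below orders the same results differently depending on weights chosen by the user. Everything in it is hypothetical: the feature names (`institutional`, `personal`, `links`, `social`), the scores between 0 and 1, and the `rank_results` function are assumptions made for this example and do not describe how any real search engine works.

```python
def rank_results(results, weights):
    """Order results by a weighted sum of their feature scores.

    `weights` maps a feature name to the importance the user gives it;
    features the user did not mention count for nothing.
    """
    def score(result):
        return sum(
            weights.get(name, 0.0) * value
            for name, value in result["features"].items()
        )
    return sorted(results, key=score, reverse=True)


# Invented results with invented feature scores between 0 and 1.
results = [
    {"url": "institution.example",
     "features": {"institutional": 1.0, "personal": 0.0,
                  "links": 0.9, "social": 0.4}},
    {"url": "blog.example",
     "features": {"institutional": 0.0, "personal": 1.0,
                  "links": 0.2, "social": 0.7}},
]

# One user wants institutional, well-linked sites first...
prefer_institutional = rank_results(results, {"institutional": 1.0, "links": 0.5})
# ...another prefers personal sites with a good social reputation.
prefer_personal = rank_results(results, {"personal": 1.0, "social": 0.5})

print([r["url"] for r in prefer_institutional])
print([r["url"] for r in prefer_personal])
```

The point of the design is that the weights belong to the user rather than to the engine: the same two pages swap places as soon as the preferences change.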