How To Improve The Quality Process Of Hubs

Introduction

This hub is inspired by the current controversy over HubPages making the "featured" and "un-featured" hub statistics public. The controversy is indirectly related to the HubPages quality assessment process. My contention is that the current rating process is deficient and could use some improvement. It unfairly places certain hubs in the "un-featured" category, causing distress among some hubbers. For the record, I do not think this stat should be made public. I do have some suggestions on how to improve the quality assessment process.

-November 2015

Background

To learn about the current quality assessment process, I read the page linked below, which describes the current HubPages process. I must commend the staff at HubPages for creating HubPages in the first place and for creating a working platform for writers. The process as currently implemented is not bad and, considering the limited staff, it is probably the best that can be done as a first trial. I do think it can be improved. Here is how I perceive the problem and the solution.

What Would I Do?

Based on the current information available, HubPages has a staff of only 23. There are approximately 55,000 hubbers and almost 800,000 hubs, with more being generated every day. The problem is how to ensure quality of content across the whole spectrum of HubPages and, more importantly, how to do it with minimum human intervention.

After thinking about this for a while, here is what I would propose if I were given the task of designing a quality assessment process. Notice that many of these items exist today in HubPages. My additions are the recommended improvements.

- Use an AI algorithm for automatic processing and checking

- Use feedback loop to incrementally improve the system

- Two phases of operation, initial assessment and a maintenance mode

- Provide feedback to authors to guide them

- Must include a small human component to assist the AI to learn

- Inject some randomness to the process (to simulate the real world)

Details On AI

I would use AI algorithms to validate the content. I envision 8 stages of checking; some are easy and some are hard.

Stage 1 - Check the Title / URL for uniqueness and valid character set. (easy)

2 - Check the category selection to make sure it is the best suited.

3 - Check all text modules for proper spelling and mechanics of writing... (easy)

4 - Check the content for duplication with existing web pages (automated search)

5 - Validate all images for sufficient resolution and for being royalty free or original content.

6 - Check all links to make sure they are valid and not SPAM sites. (easy)

7 - Make sure the promo modules of Amazon are relevant to topic and within limit. (easy)

8 - Content of hub is "context relevant" to topic (hardest to do in my opinion)

After the initial process, a score from 0 to 100 can be generated. The hubs will be placed in three initial bins: Featured (70-100), Needs work (40-69), and Rejected for violations (0-39).
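As a rough sketch of the scoring and binning step, the Python below combines eight stage results into one score and maps it to a bin. The equal weighting of the stages, the 0.0 to 1.0 scale per stage, and the function names are my own assumptions for illustration; only the three bins and their ranges come from the text above, and I assume the score covers the full 0 to 100 range used by the bins.

```python
from typing import Sequence


def combine_stage_scores(stage_scores: Sequence[float]) -> int:
    """Average per-stage results (each 0.0 to 1.0) into a 0-100 score.

    Equal weighting is an assumption; a real system would likely
    weight the harder stages differently.
    """
    if not stage_scores:
        raise ValueError("at least one stage score is required")
    if any(not 0.0 <= s <= 1.0 for s in stage_scores):
        raise ValueError("each stage score must be between 0.0 and 1.0")
    return round(100 * sum(stage_scores) / len(stage_scores))


def assign_bin(score: int) -> str:
    """Map a 0-100 score to one of the three initial bins."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 70:
        return "Featured"
    if score >= 40:
        return "Needs work"
    return "Rejected"


# Example: a hub that passes every stage except two partial results.
stages = [1.0, 1.0, 1.0, 1.0, 0.5, 1.0, 1.0, 0.5]
print(assign_bin(combine_stage_scores(stages)))
```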

Feedback Loop

The feedback loop is a key component, and it implies a time sequence. Data will be collected after the initial phase of assessment and fed back to iterate the process on a regular basis, perhaps weekly. Items to be fed back may include traffic count, human input (if any, discussed later), a random element (explained later), and the Google page rank.

The importance of the feedback is that it simulates the "learning" process of human beings. An AI system is only "intelligent" if it can learn by trial and error and try different solutions.

Another important feedback input should be the Google page rank. Once a hub is published and featured, the Google web crawler should find it in a few days. It then assigns a page rank to that hub, and the hub is indexed in Google's vast search database. No one really knows how the algorithm works, and it is periodically updated to incrementally improve the search results. This "page rank" is an important data point, along with actual traffic (page views), for determining the quality of the hub.
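One simple way to picture the weekly feedback step is a running blend of the old score with whatever new evidence comes in. The sketch below uses an exponential moving average, which is my own choice of technique and not something HubPages has described; the function name, the `learning_rate` parameter, and the idea of a single 0-100 "observed" value are all assumptions.

```python
def update_score(current: float, observed: float, learning_rate: float = 0.2) -> float:
    """Blend the current score with this week's observed evidence.

    `observed` is assumed to be a 0-100 value derived from feedback such
    as traffic and search rank. A small learning_rate makes the score
    change slowly; a large one makes it react quickly.
    """
    if not 0.0 <= learning_rate <= 1.0:
        raise ValueError("learning_rate must be between 0 and 1")
    blended = (1 - learning_rate) * current + learning_rate * observed
    # Keep the result inside the valid score range.
    return max(0.0, min(100.0, blended))


# Example: a hub starts at 60 and keeps getting strong feedback (90).
score = 60.0
for week in range(1, 5):
    score = update_score(score, 90.0)
    print(f"week {week}: {score:.1f}")
```

With this approach a single unusual week cannot swing the score very far, while a steady trend eventually moves it.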

Two Phases

This assessment process has two phases: an initial phase, which determines the starting "score," and a maintenance phase, in which the "score" may rise or fall based on time-sensitive data such as viewer traffic. The data may include not only the first access click but also time spent on the page, whether there were any user comments, and whether any sales were made as a result of viewing the page.
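To make the maintenance phase a little more concrete, here is a sketch of how the time-sensitive data could be folded into a single 0-100 signal. The field names, the caps (1,000 views, 180 seconds, 10 comments, 5 sales), and the weights are all hypothetical values I picked for illustration.

```python
from dataclasses import dataclass


@dataclass
class WeeklyStats:
    page_views: int              # reader visits this week
    avg_seconds_on_page: float   # average time spent on the page
    comments: int                # new reader comments
    sales: int                   # sales attributed to the page


def engagement_signal(stats: WeeklyStats) -> float:
    """Return a 0-100 engagement signal from one week of data.

    Each input is capped so that one very large number cannot
    dominate. Caps and weights are illustrative assumptions.
    """
    views = min(stats.page_views / 1000, 1.0)
    time_on_page = min(stats.avg_seconds_on_page / 180, 1.0)
    comments = min(stats.comments / 10, 1.0)
    sales = min(stats.sales / 5, 1.0)
    return 100 * (0.4 * views + 0.3 * time_on_page + 0.2 * comments + 0.1 * sales)


quiet_week = WeeklyStats(page_views=50, avg_seconds_on_page=30, comments=0, sales=0)
busy_week = WeeklyStats(page_views=800, avg_seconds_on_page=150, comments=6, sales=2)
print(engagement_signal(quiet_week) < engagement_signal(busy_week))
```

Notice that nothing in this signal depends on how recently the hub was edited.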

Let me address the problem of how to deal with stale pages. Currently, HubPages and other sites put a value on webpages that are updated on a regular basis. The implication is that a hub that is updated by its creator must have some improvements, however small. That may be true for some hubs, but the majority of the hubs I create require few updates. When they do, I fix errors or broken links, or occasionally add new information. It seems to me that the frequency of edits to a hub should not figure into the general quality assessment of that hub.

This is also directly related to the current debate of "featured" vs. "un-featured" hub stats. Some hubbers feel it is unfair to penalize a hub for being stale even though it gets great traffic and the content is excellent. This is especially true for people who are prolific and publish hundreds of hubs. Why should they be required to "update" their hubs periodically just to stay featured?

Feedback To Authors

The next point deals with providing meaningful feedback to hubbers so that they can provide better content. It should not be a guessing game as to why a hub is featured or not. The data is readily available as part of the automated processing. Provide this information to hubbers so that they know why their hub was rated as it was. For example: text too short, too many lists, poor image resolution, too many Amazon promo products, etc.
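Since the automated checks already know which rules a hub failed, turning them into readable feedback is a small step. The sketch below shows the idea; the thresholds and parameter names are hypothetical, since I do not know the actual limits HubPages uses.

```python
from typing import List

# Hypothetical thresholds; the real limits are not known to me.
MIN_WORDS = 500
MAX_LIST_MODULES = 3
MIN_IMAGE_WIDTH_PX = 800
MAX_AMAZON_PRODUCTS = 2


def author_feedback(word_count: int, list_modules: int,
                    smallest_image_width_px: int, amazon_products: int) -> List[str]:
    """Return the reasons a hub lost points, in plain language."""
    reasons = []
    if word_count < MIN_WORDS:
        reasons.append("text too short")
    if list_modules > MAX_LIST_MODULES:
        reasons.append("too many lists")
    if smallest_image_width_px < MIN_IMAGE_WIDTH_PX:
        reasons.append("poor image resolution")
    if amazon_products > MAX_AMAZON_PRODUCTS:
        reasons.append("too many Amazon promo products")
    return reasons


for reason in author_feedback(word_count=320, list_modules=5,
                              smallest_image_width_px=1200, amazon_products=1):
    print("-", reason)
```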

Any feedback provided to the hubber will lead to better quality hubs in the future and to improvements in existing hubs.

A second important form of feedback to authors is the dashboard, with important statistics on all the hubs that were created. The current HubPages dashboard is good but has some deficiencies.

First, the page view count per hub includes the visits of the author. This distorts the number. Any time the author visits the page, either to update it or to reply to comments, the visit is counted in the total. The "page view count" should only include other readers who have clicked on the page.
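The fix is conceptually simple: skip any view that belongs to the hub's own author. The sketch below assumes each page view is logged with a visitor ID, which is my assumption about the data and not a description of how HubPages actually records traffic.

```python
from typing import Iterable


def reader_page_views(visitor_ids: Iterable[str], author_id: str) -> int:
    """Count page views, leaving out visits made by the hub's author."""
    return sum(1 for visitor in visitor_ids if visitor != author_id)


# Example: the author ("jack") visited twice to answer comments.
views = ["jack", "reader1", "reader2", "jack", "reader1"]
print(reader_page_views(views, author_id="jack"))
```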

Second, I believe a good quality metric is the Google page rank, and it should be included in the dashboard. I would use 50 as the upper limit. Anything greater than 50 belongs in the "no rank" category. A new column in the dashboard could list the rank number. This will help the hubber choose future hub titles that will produce a higher ranking, with 1-10 being the goal. For lack of a better term, you can call this the SEO rank.
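The dashboard column described above could be filled in with something like the sketch below. It treats the rank as a position number where 1 is best, as this section does, and it assumes the position has already been obtained somehow; how to look it up is outside the scope of this sketch. The function name and the use of `None` for "not found" are my own choices.

```python
from typing import Optional


def seo_rank_label(position: Optional[int], limit: int = 50) -> str:
    """Return the text for the dashboard's SEO rank column.

    Positions from 1 to `limit` are shown as-is. Anything beyond the
    limit, or a hub that was not found at all, is shown as "no rank".
    """
    if position is None or position < 1 or position > limit:
        return "no rank"
    return str(position)


for pos in (3, 50, 51, None):
    print(pos, "->", seo_rank_label(pos))
```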

Human Input

Unfortunately or fortunately, AI has not reached the level of sophistication needed to replace an experienced human editor. If it had, many HubPages staff would be out of a job.

What I am suggesting is minimal human input for a small number of random hubs. An experienced human editor can rate these sample hubs using the same criteria programmed into the AI system, and the ratings can be provided as guidelines to the AI system: a template, if you will, to help the system learn. This plays an important role in the feedback portion mentioned earlier. Part of intelligence is the ability to learn by trial and error and also by imitation. If one provides a few samples, over time the AI system will be able to detect a pattern that will "teach" it to recognize what is good writing.
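Picking the small random set of hubs for the human editor could look like the sketch below. The function name and the optional `seed` parameter (useful for repeating a selection) are my own assumptions; the only idea taken from the text is that a few hubs are chosen at random.

```python
import random
from typing import List, Optional, Sequence


def pick_hubs_for_review(hub_ids: Sequence[str], sample_size: int,
                         seed: Optional[int] = None) -> List[str]:
    """Choose a random sample of hubs for a human editor to rate."""
    rng = random.Random(seed)
    # Never ask for more hubs than actually exist.
    count = min(sample_size, len(hub_ids))
    return rng.sample(list(hub_ids), count)


all_hubs = [f"hub-{n}" for n in range(1, 101)]
print(pick_hubs_for_review(all_hubs, sample_size=5))
```

The editor's ratings for these hubs can then be compared with the scores the AI system gave the same hubs.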

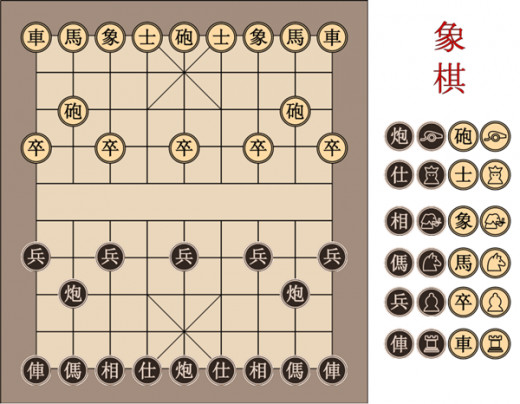

A Chinese Chess Board

Random Element

Lastly, the random element is also a key to designing a robust system. Let me give a short example to illustrate why it is needed.

Years ago, when the Palm Pilot was a hot technology gadget, I found a free game app that played Chinese Chess. This is a game similar to traditional chess, with a board and different pieces with rules on their moves. Chinese chess is easier than traditional chess because of the limited rules and can be mastered by almost anyone. When I started to play the game, it would beat me every time. I thought the designer did a pretty good job of implementing the winning algorithm. As I played more and more, I was able to detect its "rule" base. On one occasion, I finally won the game. Unfortunately, that was when I realized that the designer had limited the algorithm to only one move in any given situation. I was able to beat it every time after that. Needless to say, I stopped playing soon afterward.

What was the point of that story? The designer of the game did not provide a "random" element. In order to make the game more robust, it needs to be able to play different "equally viable moves" during the game. That way, it will ensure that the same game is rarely repeated.
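Here is a sketch of what the missing random element might have looked like. I have no knowledge of how that game was actually written; the move names, the scores, and the `tolerance` parameter are made up for illustration. The idea is simply to pick at random among the moves that are as good as, or nearly as good as, the best one.

```python
import random
from typing import List, Tuple


def choose_move(scored_moves: List[Tuple[str, float]],
                tolerance: float = 0.0, rng=random) -> str:
    """Pick randomly among the moves whose score is within `tolerance` of the best."""
    if not scored_moves:
        raise ValueError("no moves to choose from")
    best = max(score for _, score in scored_moves)
    candidates = [move for move, score in scored_moves if best - score <= tolerance]
    return rng.choice(candidates)


# Two moves are equally good; the weak third move is never chosen.
moves = [("advance cannon", 0.8), ("move horse", 0.8), ("retreat chariot", 0.2)]
print(choose_move(moves))
```

A player can no longer win by memorizing one fixed sequence, because the program may answer the same position in different ways.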

How does this apply to the QC process of HubPages? Just like the game I just described, when rating a hub, there needs to be a random element, however small, in the algorithm. After all, even two experienced human editors will produce different ratings for the same hub. That is because we are dealing with a subjective assessment of a page. There is no exact correct answer. In fact, one way to test the AI system is to provide it with the exact same page with one small, insignificant change. If it produces a small difference in the assessment score, that is a good sign.
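For the rating process, the random element could be as small as a point or two added to or subtracted from the score. The sketch below is only an illustration; the size of the jitter (`max_jitter`) is an assumption of mine and would need to be tuned so that it never moves a clearly good hub out of the featured bin.

```python
import random


def jittered_score(base_score: float, max_jitter: float = 2.0, rng=random) -> float:
    """Add a small random offset to a 0-100 score, keeping it in range."""
    noisy = base_score + rng.uniform(-max_jitter, max_jitter)
    return max(0.0, min(100.0, noisy))


# Rating the same hub twice gives slightly different results,
# much as two human editors would.
print(jittered_score(75.0))
print(jittered_score(75.0))
```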

Current Limitation of AI

I've written about the limit of Artificial Intelligence in other hubs. Let me summarize what I think is still work in progress.

1. In the image section, AI is very poor at finding relevance to the subject matter. A human can view an image and within seconds determine its "quality" and relevance to the subject matter. That is human intelligence. A human can detect if the image is upside down or reversed or "wrong" based on prior knowledge. A human can detect subtle features, such as a cartoon being a satire or a caricature, and can spot imperfections due to processing or intentional deceit. All of these are very hard for an AI system to do, not to mention the vast computing power required. By the way, this problem is not solved by ever faster computers. More computing power does not lead to smarter solutions and, in some cases, does not lead to any solution.

2. AI is also poor at identifying relevance of text. It can easily detect poor grammar or incomplete sentences, but it can't determine if the text is relevant to the main topic. Also, it can't determine how artistic or creative the text is and how it would be perceived by a human reader. This is very easy to verify. I can come up with a paragraph of text that is total gibberish, which a human editor would recognize in two seconds while an AI system may pass it as "good." On the other hand, a poet can write a novel poem that is unique and that a human editor would rate as great, while an AI system may reject it as "poor."

That's the good news for all experienced editors. Your jobs are safe for now. The Turing test was designed years ago to detect AI. I think we are still far from that despite the claims of many intelligent people. Time will tell. AI can be a great tool to help us but it can't replace us.

Summary

I must summarize by repeating my original comment. I think the current quality assessment process is good but needs improvement. Ideally, a human editor would be best to review each hub. Due to the lack of resources, AI is a good alternative but it is not perfect and it needs work. HubPages can do a lot to help all hubbers improve the quality of the content. This will eventually help all of us. Our goal is the same, to become more successful than the next. Any tools or assistance or feedback that will help should be implemented and welcomed.

Privacy is the most important factor. Many of the statistics are useful to hubbers but should not be made public. They have little meaning to the casual reader and should be kept private.

Thanks for reading my humble opinion.

Current Description Of HubPages Quality Process

- Featured Hubs and the Quality Assessment Process

The Quality Assessment Process determines which of these Hubs end up being showcased on Hubs and Topic Pages and made available to search engines. These Hubs are known as Featured Hubs.