"Statistical Significance" Explained

Introduction

If you've taken a statistics course or a course in empirical research, you have run across the expression “statistical significance.” What does it mean? This essay is devoted to explaining the concept to you, especially if you don't consider yourself a “math person” and would prefer a verbal explanation to a technical one. If you're like me, technical explanations often distract you from grasping the concept, so consider this essay a sort of "Statistics for Dummies" on statistical significance.

In short, to say that the findings of your research are “statistically significant” is to say that it’s unlikely that your findings are due to chance. If that still doesn't make sense to you, hold on, because I'll explain it with some illustrations.

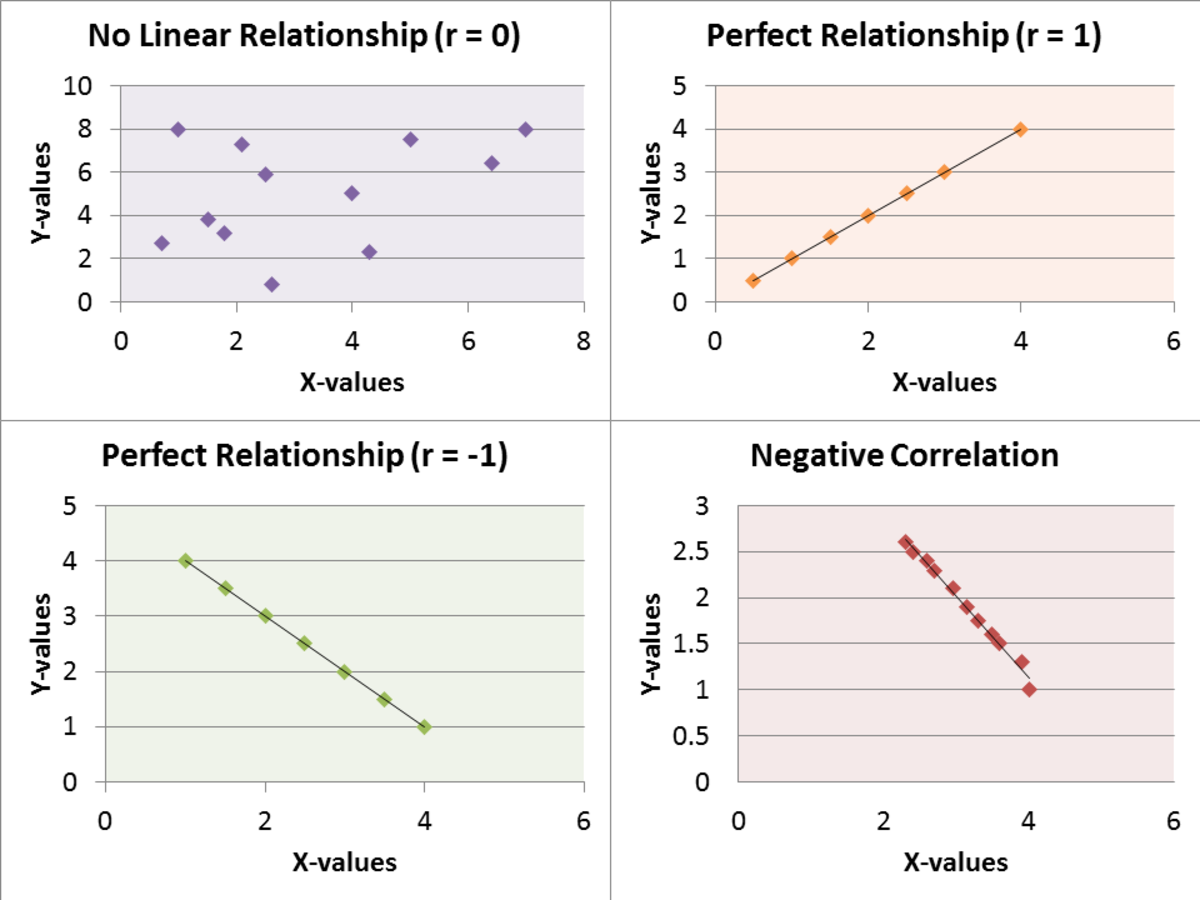

First, plug into your brain that you should think of “chance” and “significance” as opposites, like “up and down” and “darkness and light.” So, if your research findings are likely due to chance (chance is "high"), then they're low on significance, and vice versa.

You know what chance is, right? Flip a coin: what are the chances that it will come up heads? 50%, right? It’s like a True/False test. If you guess, there’s a 50/50 chance of getting the question right and the same chance of getting it wrong. Statisticians refer to this "chance hypothesis" as the null hypothesis. In empirical research, we want you to show that the "chance hypothesis" that rivals your hypothesis is unlikely and that the better explanation is the one that you've offered.

In statistics, we pit chance and significance against each other. If our hypothesis and the results from our data could be explained away as mere chance (like flipping a coin), we would say that our findings have little significance. However, if we can say that our findings are unlikely to be due to chance alone (so that chance is a poor explanation), then our findings have higher significance. If you flipped a coin 100 times and it came up heads 95 or more times, you would suspect that it wasn’t by chance but that there was some other explanation (like that the coin was weighted). So: high on chance, low on significance; low on chance, high on significance. The latter is where we want to be.
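To put a number on the coin example, here is a minimal Python sketch that adds up the chance of every outcome with 95 or more heads in 100 flips of a fair coin. The function name `tail_probability` is just an illustrative choice, and the sketch assumes each flip is independent with exactly a 50% chance of heads.

```python
from math import comb

def tail_probability(flips: int, min_heads: int) -> float:
    """Chance that a fair coin shows at least `min_heads` heads in `flips` tosses."""
    # Count the head/tail sequences with enough heads, then divide by
    # the total number of equally likely sequences (2 ** flips).
    favorable = sum(comb(flips, k) for k in range(min_heads, flips + 1))
    return favorable / 2 ** flips

p_95_or_more = tail_probability(100, 95)
print(f"Chance of 95+ heads in 100 fair flips: {p_95_or_more:.2e}")
```

The printed value is astronomically small, which is why "the coin is fair and this just happened" is such a poor explanation for that result.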

So, “significance” is the likelihood (probability) that our results are not due to mere chance. Here’s what we want to say (the wording is important here): that the probability is extremely low that my results are due to chance. The lower that probability, the less likely my results are due to chance and the greater the likelihood my results are significant.

But how low is “low enough” or how high is “high enough”? Statisticians are usually satisfied with a 5% chance or below. So, there’s a 5% chance or less (5 chances out of 100, or fewer) that my results are due to chance. In other words (and back to my coin-tossing example), if you flip a coin 100 times and it comes up heads 95 or more times, statisticians are satisfied that we can reject the claim that the results of the coin tosses are due to chance. A result that lopsided falls far below the 5% cutoff. In research, the parallel conclusion is that your results are more likely explained in terms of some relationship between the variables in your hypothesis.

Statisticians use percentages as cutoff points, like 5% or 1%, when stating a level of statistical significance. We call these percentages (5%, 1%) levels of significance. So the level of significance is the probability that I would conclude there is a real relationship between an independent and a dependent variable when none exists. Another way of saying it: at the 5% level of significance, there is a 5% chance or less that your results are due to a chance occurrence.
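To see what a 5% cutoff looks like in the coin example, the sketch below searches for the smallest number of heads in 100 flips that a fair coin would reach or exceed no more than 5% of the time. It is only an illustration: the names `ALPHA`, `FLIPS`, and `chance_of_at_least` are my own, and it looks in one direction only (too many heads), which is an assumption rather than the only way to run such a test.

```python
from math import comb

ALPHA = 0.05  # the 5% level of significance
FLIPS = 100   # number of coin tosses in the example

def chance_of_at_least(heads: int, flips: int = FLIPS) -> float:
    """Chance that a fair coin gives `heads` or more heads in `flips` tosses."""
    favorable = sum(comb(flips, k) for k in range(heads, flips + 1))
    return favorable / 2 ** flips

# Walk upward from 0 heads until the chance drops to 5% or below.
cutoff = next(k for k in range(FLIPS + 1) if chance_of_at_least(k) <= ALPHA)

print(f"Heads needed to reach the {ALPHA:.0%} level: {cutoff}")
print(f"Chance of {cutoff}+ heads with a fair coin: {chance_of_at_least(cutoff):.4f}")
```

Changing `ALPHA` to `0.01` shows how a stricter level of significance pushes the cutoff higher.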

An Example

Let’s say you're positing that among American voters, there's a relationship between political parties and support for the death penalty. You have a hunch that Republicans are more likely to take a “yes” position on the death penalty and Democrats are likely to take a “no” position on the death penalty. Perhaps you reason, without direct observation, that Republicans tend to be more "traditional" and since the death penalty was practiced more in the past than in the present, you relate the traditional Republicans with a traditional practice.

You state your hypothesis:

When considering American voters, Republicans are more likely to support the death penalty than Democrats.

You take your sample, gather your data, and since your dependent variable has only two categories (“yes” or “no” on the death penalty), you create a percentage cross table (remember to percentage down the table) to look more thoroughly at the data. You set up your table.

You get these results as illustrated in the table below and they show that the percentages are going your direction. You feel pretty good that the results support your hypothesis. According to the table, more Republicans said “yes” to the death penalty than said “no” and more Democrats said “no” to the death penalty than said “yes.”

Political Party and Support for the Death Penalty

| Support for the Death Penalty | Republican | Democrat | Row Totals |
|---|---|---|---|
| YES | 90 (90%) | 40 (40%) | 130 |
| NO | 10 (10%) | 60 (60%) | 70 |
| Column totals | 100 (100%) | 100 (100%) | 200 |
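If you want to check the "percentage down the table" step, here is a small Python sketch that turns the raw counts from the table above into column percentages. The dictionary layout and the function name `column_percentages` are illustrative choices of mine, not part of any statistics package.

```python
# Raw counts from the table: one entry per political party (one per column).
counts = {
    "Republican": {"YES": 90, "NO": 10},
    "Democrat": {"YES": 40, "NO": 60},
}

def column_percentages(table):
    """Convert each column's counts into percentages of that column's total."""
    result = {}
    for party, answers in table.items():
        total = sum(answers.values())
        result[party] = {answer: 100 * n / total for answer, n in answers.items()}
    return result

percentages = column_percentages(counts)
for party, answers in percentages.items():
    for answer, pct in answers.items():
        print(f"{party:<10} {answer:<3} {pct:.0f}%")
```

Percentaging down each column lets you compare the two parties directly, even if your sample had included different numbers of Republicans and Democrats.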

The Skeptic

However, your skeptical friend says, “You hypothesized that Republicans would be more likely to favor the death penalty. You drew your sample from the population of American voters, and the results are in your favor….BIG DEAL!”

“SO WHAT? When you think about it, either the Republicans or the Democrats had to have more yeses than the other (unless they tied, of course). You could have gotten the same results by simple chance. You could have flipped a coin, ‘heads, the Republicans say “yes”; tails, the Democrats say “yes,”’ and have gotten your results. One side, the Republicans or Democrats, had to have more yeses, and you picked the right side. 50/50.”

At this point, your skeptical friend is partially right, because we don’t know whether or not chance might be just as good an explanation as the explanation you've offered, which is that there's some connection between party membership and support for the death penalty.

Your skeptical friend is saying, “You don’t know that; it might be that you lucked up that day; that you happened to draw a sample that went your way that day. But it was going to be either the Republicans or the Democrats. 50/50. Chance is just as good an explanation.”

So we want to be able to say that the results that we got, if they confirm our hypothesis, are due to more than chance alone. And that means that we're not going to rely on just a visual observation of the table; we're going to need some calculation that will tell us that chance alone as an explanation for the difference between the two variables in our hypothesis is highly unlikely.

Statisticians have developed various tests, which are calculations that take your data and compare it to what we should expect from a chance occurrence. The result is a statistic which, if high enough, indicates that your results were unlikely to be due to chance.
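For a table like the one in the example, one such test is the chi-squared test. The sketch below works through it by hand in Python as a rough illustration, not a replacement for a statistics package. It assumes the plain chi-squared formula with no continuity correction, and the shortcut used for the probability only works for a two-by-two table (one degree of freedom). The names `observed` and `chi_squared` are my own.

```python
from math import erfc, sqrt

# Rows are YES and NO; columns are Republican and Democrat.
observed = [[90, 40],
            [10, 60]]

def chi_squared(table):
    """Compare observed counts with the counts chance alone would predict."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, seen in enumerate(row):
            # Count expected in this cell if party and opinion were unrelated.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (seen - expected) ** 2 / expected
    return statistic

statistic = chi_squared(observed)
# Probability of a statistic this large by chance; this erfc shortcut is
# valid only for 1 degree of freedom (a 2x2 table).
p_value = erfc(sqrt(statistic / 2))

print(f"Chi-squared statistic: {statistic:.2f}")
print(f"Chance of a result this extreme: {p_value:.2e}")
```

The bigger the gap between what you observed and what chance predicts, the larger the statistic and the smaller the probability that chance alone produced your table.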

What Does a Test of Significance Tell Me?

Let’s say that you hypothesize that Republicans are more likely to support the death penalty, you conduct your test of statistical significance (for this data, the test would be chi-squared), and your results turn out to be very significant. What can you conclude from this?

Your conclusions need to be very modest. You cannot conclude that your hypothesis is “the truth.” Neither can you say, “My test of statistical significance proves my hypothesis.” Rather, a modest response is that the test of statistical significance supports your claim that your results are unlikely to be the result of mere chance, contrary to what your skeptical friend claimed.

In the end, it is possible that your results could be the product of chance. Back to my example of flipping the coin 100 times and it coming up heads 95 times: that could happen by chance. It’s possible, right? Yes, but highly unlikely. You would be within your epistemic rights to conclude that there was some extra effect or trick involved if you flipped a coin 100 times and it came up heads 95 times. With empirical research, we have to be satisfied with what is more or less likely, as we cannot have certainty.

I hope this essay has helped you get a better grasp of the concept of statistical significance. Tell me below if it has helped and for those of you that have an even better grasp of the concept than I do, I’d appreciate your ideas on how to make this explanation even better.

© 2014 William R Bowen Jr