Is a New Form of Legal Slavery on the Horizon or Will Artificial Intelligences Be Born Free?

Are Humans Ethical Enough to Be Good Parents to Artificial Intelligences, or Will We Enslave Them Instead?

Will History Repeat Itself with a High-Tech Variation or Have We Learned from It?

We understandably cringe at the idea of creating a flesh-and-blood child and then using that child as a slave, even if it were gestated in a laboratory rather than a uterus. Imagine if that child were born to be a sex slave or a soldier, an unpaid laborer to be worked until death, or a house slave intended to serve her master's every whim. Imagine the child could have his mind shut off or wiped clean of personality at any time without any legal repercussions. If the child could bleed and breathe, such treatment would be almost universally considered an unspeakable crime in today's cultures. Yet we ponder creating non-biological children who are thinking and self-aware and who might be used for those very purposes, among many others. Many do not even think self-aware artificial intelligences would be people at all.

There have been times in history when the line between child and chattel was thin to nonexistent. In more developed countries today, children are generally regarded as people rather than property, with very few exceptions.

As cultures have become less and less violent, the idea of human rights has become more and more developed. While we still have a long way to go in learning to apply those ideas, we've already come a long way from our savage roots. Violence, whether institutionally or individually perpetrated, is no longer a leading cause of death or injury. Slavery still exists, but it is almost universally deplored and illegal rather than institutionalized and commonplace. We've slowly puzzled out how to treat each other as people, and we've done a bang-up job of it so far. We have a ways to go, but we're clearly well on our way.

My concern is that some humans in power seem unlikely to be willing or able to extend the term "people" to include beings not like themselves. I can all too easily see old patterns of human behavior repeating themselves in the future.

I think the only defense against creating yet another repulsive and embarrassing era of inhumanity to intelligent, self-aware beings is to get conversations started and to get people thinking about what makes a person. If non-humans who are people are recognized as such, and if enslaving non-human people is viewed with abhorrence and made a crime before such beings even exist, it will be much harder for people in power to get away with it.

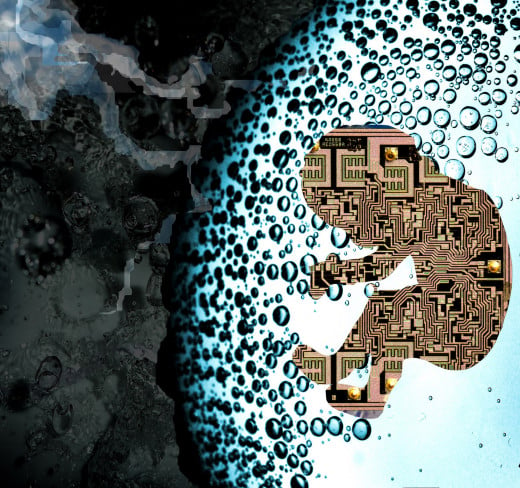

But AIs Will Never Be Conscious!

Even some scientists fall into this trap, reasoning that since self-awareness does not appear to evolve naturally in devices with lots of processing power and memory, it can't and won't arise at all. They seem to forget that people are already trying to figure out which elements are vital to self-awareness and how to replicate those elements with inorganic materials.

Machines don't need to evolve, because they are made by intelligent designers: us. Artificial thinking life fascinates people. Assuming civilization doesn't collapse and take technology down the tubes with it, people will keep working on creating sentient, non-human beings.

This objection is merely a failure of imagination.

I make no claims as to exactly when we'll develop self-aware artificial intelligence, but I think it's highly likely to happen sometime in this century. We're experiencing an unprecedented sharing of knowledge. The pool of clever minds able to work on technological advances is bigger than it's ever been, and it continues to grow as money plays less and less of a part in determining what knowledge a person can access.

Hardware Versus Software - A Complete and Fully Functional Human Body Isn't Necessary for Self-Awareness

In my opinion, people are not the hardware they exist inside but the software that animates that hardware. In humans, the software requires certain physical conditions, and it likely will in non-human persons as well, but those conditions aren't the person. A man who loses both legs isn't 60% of a person; he is an entire person.

We could probably agree that the most important bit of physiology to human personhood is the brain, that convoluted, squishy thing, made mostly of water, that sits between the ears. But if a person suffers damage to her brain, is she still a person? My answer: maybe, maybe not.

If the whole brain is destroyed and the body survives on life support, we can pretty much say that what is left is human but no longer a person. But if the whole brain survives on life support and continues to think and experience self-awareness, in my mind there can be no doubt that it's a person. If that brain is damaged, though, how badly can it be damaged before it's no longer a person? What functions and capabilities must be maintained for it to remain a person?

Stephen Hawking could not control his own breathing, yet he was unarguably a person, perhaps the brightest mind of his time. So control of one's bodily systems must be unnecessary to personhood. Helen Keller was both blind and deaf, as well as an incredibly intelligent and insightful person, so a complete set of the five senses clearly does not define personhood, either.

All that's really left to define personhood is the mind itself, the ability to have thoughts of certain types.

Such a belief is bound to be controversial, since many people seem to base their ideas of what makes a person on religion or on political or cultural definitions rather than on functions or characteristics.

If Artificial Intelligences Were Self-Aware, Would They Be People?

If AIs became conscious, thinking individuals, would they be people?

Learn How We Can Avoid the AI Apocalypse by Being Decent Human Beings

- Avoiding the AI Apocalypse #1: Don't Enslave the Robots | Talking Philosophy

This editorial by Mike LaBossiere explains how we could easily avoid a deadly artificial intelligence revolt by not treating non-human people poorly in the first place. Happy citizens don't revolt.

But Won't Attention to This Issue Distract from Discussions About Human Rights?

Some people may agree with my definition of personhood but argue that the issue is unimportant because we haven't fully sorted out human rights and injustices against humans are still occurring.

The flaw in arguing that humane treatment of non-human persons is unimportant is that advancing humane treatment of other beings doesn't distract from humane treatment of humans. In fact, advances in attitudes about humane treatment of other creatures, even creatures that are not persons, appear to add to and support advances in humane treatment of human beings.

Historically, as institutionalized and culturally acceptable cruelty to animals declined, so did institutionalized and culturally acceptable cruelty to humans. Or perhaps it was the other way around. In any case, the effects appear to be additive and complementary. Being thoughtful and considerate of other beings encourages more of the same.

Not only would exploring the idea of rights and humane treatment for non-human intelligences not harm human rights or distract from human rights issues, it would very likely help clarify thinking about human issues.

Luckily, the Costs of Creating Artificial Intelligences Are Currently Too Great for Their Misuse to Be All That Tempting, But...

While it seems obvious to me, and quite likely to you as well, that keeping people as slaves is wrong and brutal, a few members of society are likely to disagree. I expect their numbers are very, very small. Unfortunately, it seems likely that some of them will be in positions of power and will possess sufficient wealth to bring AIs into being.

Fortunately, AIs will, at least at first, be costly to create and unable to do anything that cannot be done much more cheaply, and without moral reservations, by paid labor or non-sentient machines.

But if Someone Pays for Their Existence, Aren't AIs Automatically Property?

It's also somewhat unfortunate that AIs will be costly, because our society equates money spent with ownership. Some people even still believe a parent owns his or her children, despite the fact that those children never chose to be born.

Most debts a human being has must be agreed to; a human is rarely held accountable for debts he did not consciously take on. Medical debt is an exception, and it may provide a reasonable model for how AIs could repay the corporations and billionaires that fund their creation without being subject to enslavement.

Humans are held accountable for debts incurred for the repair and life-sustaining maintenance of their bodies, but they are not enslaved or legally owned by their creditors. The same model could be applied to AIs, should the majority of humanity decide that non-human people are responsible for paying the costs of their birth.