Tips Maintenance Statistics - Maintenance Cruz

Data linked

What are statistics? Well, truly, they are anything we study and note over a period of time. This could be as simple as counting to yourself as a machine cycles to pin down an intermittent timing issue. It can be as involved as constant data capture and charting. This leads into the main focus of this article: what data do you really need, and how much of it?

It is very common to find useless statistics being gathered that never really go anywhere. You find this in many companies, and you ask yourself why. The reasons vary, but I find the most common one is that nobody examined the data thoroughly before capture began. Another is that the data was once important to a process, or just to one person, but is no longer relevant and was simply never removed from the data stream.

The amount of useless data that gets collected, stored, and sorted amazes me. Unfortunately for me, I am one of those people who asks those questions. "What do you use that chart for?" "You know, I'm not sure. I have just been printing it off and including it with my report for years." Further down the line, you find out that the chart ends up in the paper shredder without anyone getting any value from it.

Data that gives false or skewed feedback is worse, because it leads to incorrect actions. Suppose a report shows many hours of downtime pointing at one machine. You investigate the data first and find that the reporting is flawed: anything that goes wrong in that general area is entered against your culprit machine. In truth, only a fraction of that downtime was actually attributable to the machine, and more specific reporting is needed to separate the processes properly. The reporting personnel generally do not know or do not particularly care; they reported the downtime, job done.
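To make the misattribution concrete, here is a minimal sketch that totals the same downtime records two ways: by the machine the operator entered, and by a more specific "actual source" field. The record layout, machine names, and minutes are all hypothetical; the point is only how a catch-all entry inflates one machine's total.

```python
from collections import defaultdict

# Hypothetical downtime records: (reported_machine, actual_source, minutes).
# reported_machine is what was typed on the report; actual_source is what a
# more specific reason code would have captured.
records = [
    ("Filler 3", "Filler 3", 12),
    ("Filler 3", "Conveyor 2", 45),
    ("Filler 3", "Capper 1", 30),
    ("Filler 3", "Filler 3", 8),
    ("Labeler 1", "Labeler 1", 20),
]

def total_by(records, key_index):
    """Sum downtime minutes grouped by the field at key_index."""
    totals = defaultdict(int)
    for record in records:
        totals[record[key_index]] += record[2]
    return dict(totals)

reported = total_by(records, 0)
actual = total_by(records, 1)
print(reported)  # {'Filler 3': 95, 'Labeler 1': 20}
print(actual)    # {'Filler 3': 20, 'Conveyor 2': 45, 'Capper 1': 30, 'Labeler 1': 20}
```

Total downtime is identical either way; only the attribution changes, which is exactly why a flawed report can send you after the wrong machine.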

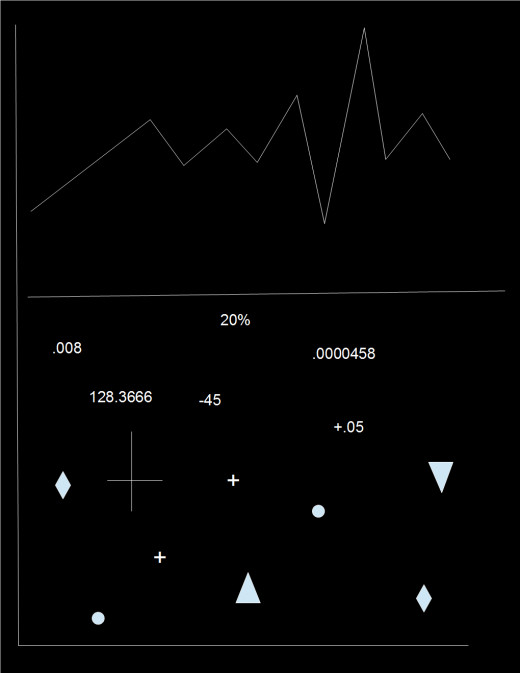

Even data collected automatically from sensors can be very misleading. Consider a sensor in the middle portion of a process pipework run. It shows many spikes, and in general the process looks poorly controlled. Deeper investigation reveals that the spikes are a normal part of the system dynamics and that the surges are arrested further down the system. Distance, time, volume, velocity, sensor position, and many other factors can skew the data.
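The mid-pipe sensor example turns on telling normal surges apart from a real loss of control. One simple way to do that in software, assuming you know the acceptable band, is to flag only excursions that persist for several consecutive samples. The band limits, the hypothetical `min_run` threshold, and the readings below are illustrative assumptions, and a filter like this is no substitute for understanding where the sensor sits and how the system behaves.

```python
def sustained_excursions(values, low, high, min_run):
    """Return (start_index, length) for every run of at least min_run
    consecutive samples outside low..high. Shorter runs are treated as
    normal transient spikes and ignored."""
    runs = []
    start = None
    # Append an in-band sentinel (low itself) so a run at the end still closes.
    for i, v in enumerate(list(values) + [low]):
        outside = v < low or v > high
        if outside and start is None:
            start = i
        elif not outside and start is not None:
            if i - start >= min_run:
                runs.append((start, i - start))
            start = None
    return runs

# Hypothetical pressure readings (bar) from a mid-pipe sensor, one per second.
spiky = [5.0, 5.1, 7.9, 5.0, 4.9, 5.0, 8.2, 5.1, 5.0, 7.7, 5.0]  # brief surges only
drifting = [5.0, 5.1, 5.0, 6.2, 6.4, 6.3, 6.5, 6.6, 5.0, 5.1]   # sustained excursion

print(sum(1 for v in spiky if v < 4.5 or v > 5.5))          # 3
print(sustained_excursions(spiky, 4.5, 5.5, min_run=3))     # []
print(sustained_excursions(drifting, 4.5, 5.5, min_run=3))  # [(3, 5)]
```

The raw spiky trace trips the band three times, yet none of those trips lasts long enough to count as a control problem; the drifting trace does.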

Resolution

Resolution is the best word to describe this side of data analysis. You have to ask: how much information do we really need? You can look at feedback loops at millisecond intervals, tenths of seconds, minutes, or longer. It is very easy to gather far more information than you need, to the point that it becomes hard to draw any meaningful analysis from the data. Of course, some processes genuinely do require very tight control and data monitoring.

It takes thoughtful consideration and engineering input to fully understand the resolution you need. It may seem a good idea to gather everything you can and sort it out later. Unless you are specifically trying to identify an anomaly or intermittent condition, chances are that much of the data you are saving is of little use.

Ask yourself: if we look at this per minute rather than per second, do we lose our grasp on the process variable? If your system is well engineered and has a strong history of good performance, then capturing every data point every tenth of a second is probably not gaining you anything. If the process starts to show signs of a control problem, then shorten the capture interval to identify the issue. The longer-interval capture showed the process doing something strange, and that cues you to examine the data at a higher resolution for troubleshooting.
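The "coarse by default, fine when something looks strange" approach can be sketched as two small functions: one that reduces per-second samples to per-minute averages, and one that picks the capture interval from those averages. The setpoint, tolerance, 60-second and 0.1-second intervals, and the readings are hypothetical; in practice this switch would usually live in your historian or PLC logging configuration rather than in a script.

```python
from statistics import mean

def downsample(samples, factor):
    """Average consecutive groups of `factor` samples (e.g. 60 one-second
    samples -> one per-minute value). A trailing partial group is dropped."""
    return [mean(samples[i:i + factor])
            for i in range(0, len(samples) - factor + 1, factor)]

def choose_interval(summary, setpoint, tolerance, normal_s=60.0, fine_s=0.1):
    """Return a capture interval in seconds: coarse while every per-minute
    value stays within tolerance of setpoint, fine once any drifts out."""
    if any(abs(v - setpoint) > tolerance for v in summary):
        return fine_s
    return normal_s

# Hypothetical per-second temperature readings (deg C), three minutes each.
steady = [50.0] * 180
upset = [50.0] * 120 + [53.0] * 60  # third minute drifts 3 deg high

print(downsample(steady, 60))                              # [50.0, 50.0, 50.0]
print(choose_interval(downsample(steady, 60), 50.0, 2.0))  # 60.0
print(choose_interval(downsample(upset, 60), 50.0, 2.0))   # 0.1
```

The per-minute view is enough to notice the upset; only then is it worth paying for tenth-of-a-second capture.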

There is an argument for gathering data at troubleshooting rates all the time, so that it is already captured when trouble arrives. This is fine if you have the storage for that much data, but how often will you need it? The answer depends directly on how well your equipment and process run, and that determines your data needs. If your system regularly needs fine tuning, then the highest capture rates are justified. If your system churns out product daily with very few issues, then the capture resolution can be relaxed quite a bit.
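To put a rough number on "if you have the storage", here is a back-of-the-envelope sketch for twenty signals logged every tenth of a second versus once a minute. It assumes a flat 16 bytes per stored sample (for example, an 8-byte timestamp plus an 8-byte value) and no compression; real historians compress and store differently, so treat the absolute figures as illustrative and the ratio as the useful part.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000

def bytes_per_year(interval_ms, tags, bytes_per_sample=16, days=365):
    """Raw storage for `tags` signals, each logged every `interval_ms`
    milliseconds, assuming a fixed size per stored sample (no compression)."""
    samples_per_tag_per_day = MS_PER_DAY // interval_ms
    return samples_per_tag_per_day * tags * bytes_per_sample * days

fine = bytes_per_year(100, tags=20)       # every tenth of a second
coarse = bytes_per_year(60_000, tags=20)  # once a minute

print(f"0.1 s logging: {fine / 1e9:.1f} GB/year")    # 100.9 GB/year
print(f"60 s logging:  {coarse / 1e6:.1f} MB/year")  # 168.2 MB/year
print(f"ratio: {fine // coarse}x")                   # 600x
```

Whatever the per-sample size turns out to be, logging at a tenth of a second instead of once a minute multiplies the volume by 600.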

Do You Even Use It?

The title speaks for itself: do you even use the data? What if you found out that most of the reported data is never used by anyone? Would it surprise you? Most of the people I have asked answer, "No, I would not be surprised that no one uses this information," or "I know we do not do anything with the data, and I have always wondered why we write it down."

If only the absolutely necessary information were collected and the useless information were no longer captured, how much time could be saved? Why is data that is already captured automatically also being entered by hand on a run sheet? Twenty sensor signals are being captured, but only five are relevant. Quantify your needs and streamline the data so that it points directly at the information that can actually be used to improve.

The meeting is full of charts, and if you ask anyone at the end whether they remember them, they don't. Pare down the information you share so it clearly describes what can actually be used. It is counterproductive to put up a slew of charts describing too many variables; who is really going to follow them? One jagged line chart shows that last year we were a point higher, and the next shows a point lower on a totally unrelated measure. This is not to say that charts are out; quite the contrary, use charts to depict quantified data graphically. Show data that addresses the heart of a problem, or data narrowed to one specific data set. Fully discuss the data at hand and remove it before moving on to other information; you don't want people looking at the wrong chart while you give your presentation.

Pick The Big Hitter

Choose the data to be examined carefully, and start with the data that can improve your process the most. It seems like a no-brainer, but a lot of insignificant information is pored over every day while the elephant in the room is ignored.

You will probably need only a split second to come up with your "big hitter." It seems every process has one particular machine or process that causes the most grief; examine the data on that subject first. That data may just relieve a constant headache and make a significant improvement. Maybe you have several "big hitter" issues. Fine: sort them from worst to least and analyze each in turn. Yes, you can look at all of them and plan to fix all of them, but analyze one and make a plan, then move on to the next.
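Sorting big hitters "from worst to least" is essentially a Pareto ranking. A minimal sketch, assuming you already have downtime totals per machine (the names and minutes below are hypothetical), sorts them worst-first and shows each one's cumulative share of total downtime:

```python
def pareto(downtime_minutes):
    """Sort causes worst-first and attach each one's cumulative share
    (percent, rounded to one decimal) of total downtime."""
    total = sum(downtime_minutes.values())
    ranked = sorted(downtime_minutes.items(), key=lambda item: item[1], reverse=True)
    result = []
    running = 0
    for name, minutes in ranked:
        running += minutes
        result.append((name, minutes, round(100 * running / total, 1)))
    return result

# Hypothetical monthly downtime totals (minutes) per machine.
downtime = {"Palletizer": 140, "Filler 3": 610, "Capper 1": 95,
            "Labeler 1": 205, "Conveyor 2": 50}

for name, minutes, cumulative_pct in pareto(downtime):
    print(f"{name:<11} {minutes:>4} min  {cumulative_pct:>5.1f}% cumulative")
```

With these numbers the first machine alone accounts for 55.5% of the downtime, which makes the order of attack obvious.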

If the data doesn't readily expose the flaw in your system, evaluate whether you are collecting the right data. You may need more data or a different type of data.

For example, a machine jams intermittently. The data shows the exact occurrences but does not reveal a reason for the jams. You capture data from your control processor to look at inputs and outputs, but everything seems to be working correctly. You then place a video camera to record the machine in operation. The data shows that there was a jam at 1:37:15 pm, so you can now view the machine in operation at that exact time; just be sure that the clocks are synchronized.

At this point you realize that all the other data you were capturing was useless until you began capturing the data that would actually bring the real problem into view. The issue could only be pinned down by using two data types together. This should be a lesson for the next issue: maybe there are other ways of looking at the problem that have not been tried yet. Maybe all the data you have been looking at, which has done nothing to correct the issue, is totally or partially useless.
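The jam example depends on lining up two clocks. Ideally both devices are synchronized to a common time source (NTP is one common way to do that). If they cannot be, a sketch like the one below converts a logged jam time to a seek position in the video, assuming you have measured how far the recorder's clock runs ahead of the controller's. The offset, the 30-second pre-roll, and the date are hypothetical.

```python
from datetime import datetime, timedelta

def video_seek_time(event_time, recorder_offset, pre_roll=timedelta(seconds=30)):
    """Convert a controller-logged event time to the video recorder's clock.

    recorder_offset = recorder_clock - controller_clock, measured once by
    reading both clocks at the same instant (an assumption of this sketch).
    pre_roll starts playback early so the lead-up to the jam is visible."""
    return event_time + recorder_offset - pre_roll

jam = datetime(2024, 5, 14, 13, 37, 15)     # hypothetical date; 1:37:15 pm from the jam log
offset = timedelta(seconds=42)              # hypothetical: recorder runs 42 s ahead
print(video_seek_time(jam, offset).time())  # 13:37:27
```

Starting a little before the logged time matters as much as the offset: the cause of a jam usually happens in the seconds before the controller records it.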

Summary

We need data, and we use it daily. Our companies rely on data to ensure they are profitable. We use data to ensure we can live our lives without going too far into debt. Making sure that you are looking at the right data is the key. Streamline your data so that you capture the important information and stop collecting the useless. Let your automatic capture collect the data, and relieve your operators and supervisors of the burden of logging the same data day after day.

Take a look at your present situation and evaluate whether the information you log is doing any good. Follow this information to its end and find out whether anyone is actually using it. Are those data sheets just getting filed away and taking up space for no apparent reason?