Understanding Random Forest Classifier and How It Works

A Random Forest classifier is a model designed to reduce the effect of noise in its input data. When a single model is given a dataset and a long list of variables, it can pick up spurious relationships between the inputs and the chosen target variable. The result is overfitting: the model fits the training data closely but may fail to classify future input correctly.

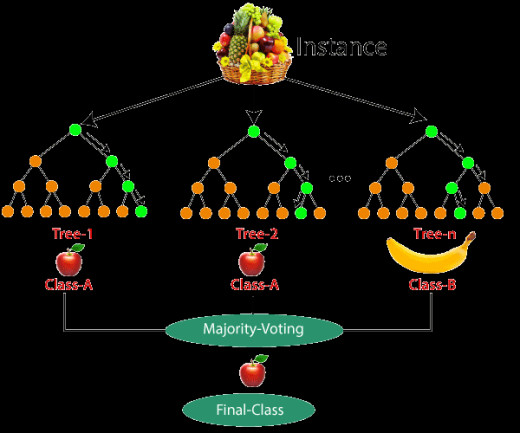

A random forest is made up of many decision trees, each built using only a subset of the input variables and their values, so a noise-generating variable or characteristic is left out of many of the trees. Each decision tree is constructed on a bootstrapped sample of the original training data. To classify an object, its input vector is passed down every tree in the forest. Each tree casts a vote for the category it thinks the object belongs to, and the forest chooses the classification that receives the majority of votes across all the trees.
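The voting step can be illustrated with a short Python sketch. This is a minimal illustration, not a full classifier: the function name `majority_vote` and the sample votes are made up for the example, and each tree is assumed to have already produced its own class label.

```python
from collections import Counter


def majority_vote(tree_predictions):
    """Return the class label chosen by the largest number of trees."""
    counts = Counter(tree_predictions)
    # most_common(1) yields a single (label, count) pair for the top label.
    label, _count = counts.most_common(1)[0]
    return label


# Hypothetical votes from five trees for one input vector.
votes = ["spam", "ham", "spam", "spam", "ham"]
prediction = majority_vote(votes)
print(prediction)
```

Here three of the five trees vote "spam", so that label wins. This sketch does not treat ties specially; a real implementation would need a rule for them.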

Every tree in the forest is developed as follows:

a) Let P be the number of examples in the original training data. Draw a bootstrap sample of size P from the training data, sampling with replacement. This sample is the new training set on which the tree is grown. Examples that are in the original training data but were not drawn into the bootstrap sample are referred to as out-of-bag data.

b) Let S be the total number of input features in the original training data. At each node of the tree, only s attributes are selected at random, where s < S, and the attribute among them that produces the best split is used to split the node. The value of s is held constant while the forest is grown.
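Steps a) and b) can be sketched in Python using only the standard library. This is a rough illustration under stated assumptions: the helper names `bootstrap_sample` and `random_feature_subset` and the values chosen for P, S and s are hypothetical, and the actual tree-growing and split-selection logic is left out.

```python
import random


def bootstrap_sample(P, rng):
    """Draw P example indices with replacement.

    Returns the in-bag indices (may contain repeats) and the
    out-of-bag indices (examples that were never drawn).
    """
    in_bag = [rng.randrange(P) for _ in range(P)]
    out_of_bag = sorted(set(range(P)) - set(in_bag))
    return in_bag, out_of_bag


def random_feature_subset(S, s, rng):
    """Pick s distinct feature indices out of S as split candidates."""
    return rng.sample(range(S), s)


rng = random.Random(0)   # seeded only so the run is repeatable
P, S, s = 10, 6, 2       # illustrative sizes, with s < S

in_bag, out_of_bag = bootstrap_sample(P, rng)
candidates = random_feature_subset(S, s, rng)  # would be redrawn at each node

print("in-bag indices:    ", in_bag)
print("out-of-bag indices:", out_of_bag)
print("candidate features:", candidates)
```

Because the sample is drawn with replacement, some examples appear more than once and others not at all. The chance that a given example is never drawn is (1 - 1/P)^P, which approaches 1/e as P grows, so roughly 37% of the training data ends up out-of-bag for each tree.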

The error rate of the forest depends on two things: the strength, or accuracy, of each individual tree and the correlation between the trees. Increasing the correlation between trees tends to increase the forest's error rate, while increasing the accuracy of each tree decreases it. Both correlation and strength depend on s: reducing s lowers both, and increasing s raises both, so s has to be chosen to balance the two effects.