The History of Computer Technology

Take a look at the picture to the right. Do you know what those are? Do you remember using them back in the 1970s and 1980s? I remember both of those disks. My kids see them and have no clue how computers could ever have used such a thing. Did you know that the 8-inch floppy was first used in 1971 and could hold only 79.7 kB of storage? The 3 1/2-inch floppy was better when it was released in 1982, at 200 kB of storage, and by 1987 it could actually hold up to 10 MB. Why is this so crazy? Well, after looking on my computer, I found that the pictures I take with my camera range from 1 to 5 MB each! I couldn't have put even one picture on those early floppy disks! My Word and Excel documents are a lot smaller, ranging from 14 kB to 130 kB, so at least one of those would have fit, but I really could not imagine my life as it is today without the storage capacity I have now. I often think I would DIE without my computer LOL

So where did computer technology start? There are a lot of ideas out there about exactly when it started, but by definition a computer is any device capable of performing mathematical calculations. By that definition, the first computing devices date back to at least 300 BCE with the abacus, followed much later by the calculating clock in the 1620s and the slide rule in the 1630s.

The Calculating Clock was the first gear-powered machine that worked like a computer. It was created by Wilhelm Schickard in 1623 and was operated by pulling or pushing rods set inside a glass case.

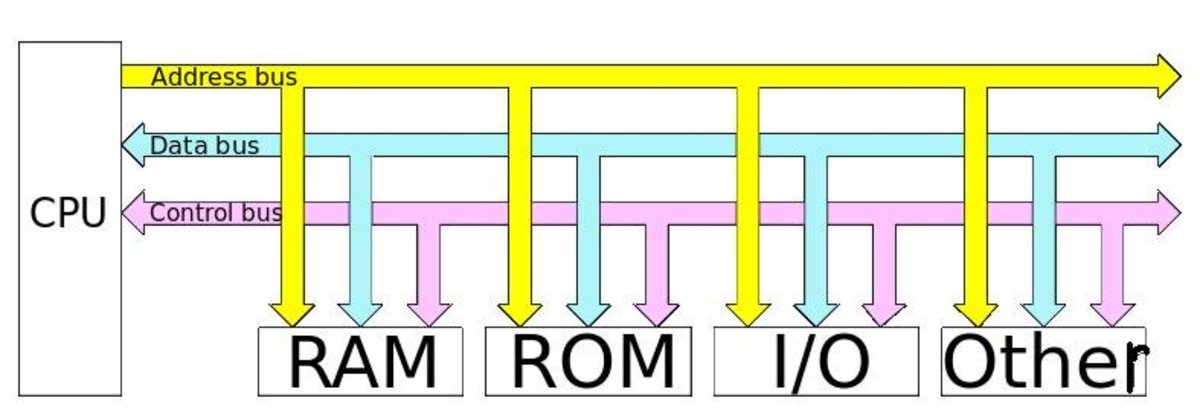

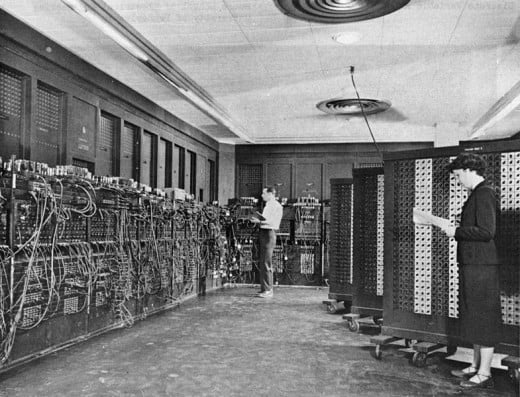

First Generation computers were built between 1940 and 1956. These computers used vacuum tubes for circuitry and magnetic drums for memory. They were often enormous, taking up entire rooms, were very expensive to operate and generated a lot of heat, which often caused malfunctions (something that still happens to computers today). They relied on machine language, the lowest-level programming language, and they could solve only one problem at a time.

The ENIAC (Electronic Numerical Integrator and Computer) was built between 1943 and 1945. It was so large that it filled a room of about 1,800 square feet, and it used nearly 18,000 vacuum tubes to run it.

Second Generation computers were built between 1956 and 1963. Transistors replaced vacuum tubes, which allowed computers to become smaller, faster, cheaper, more energy-efficient and more reliable than first generation computers. These machines moved from cryptic binary machine language to symbolic (assembly) languages, which allowed programmers to specify instructions in words rather than in numbers. They stored their instructions in memory, which changed from the magnetic drums of the first generation to magnetic core technology.

Third Generation computers were built between 1964 and 1971. The hallmark of this generation was the integrated circuit: transistors were miniaturized and placed on silicon chips, called semiconductors, which drastically increased the speed and efficiency of computers.

Third generation computers started using keyboards and monitors and interfaced with an operating system, which allowed them to run multiple applications at one time. Because they were smaller and cheaper than their predecessors, this was the first generation of computers to become available to a mass audience.

The Fourth Generation of computers began in 1971 with the microprocessor. A microprocessor is a single integrated-circuit chip that contains what amounts to an entire computer (at least a 1940s-era one). The first microprocessor, the Intel 4004, was made by Intel in 1971, and with this technology the home computer became a possibility. Intel is still, to this day, one of the leading makers of microprocessor chips.

In 1975 the Altair 8800 (pictured below) became the first personal computer for home use. It contained an Intel 8080 microprocessor and had to be assembled by the person who bought it (could you imagine getting the instruction book for that one LOL). Today the Altair is recognized as the spark that led to the microcomputer revolution.

1980 was a big year for Microsoft, as this was the year it publicly released its first operating system, Xenix, a variant of Unix. Xenix would become home to the first version of Microsoft's word processor, Microsoft Word, which was released in 1983 under its original title, "Multi-Tool Word". Word became notable for its "What you see is what you get" (WYSIWYG) concept.

In 1981 IBM introduced its first PC (personal computer), model number 5150.

In 1984 Apple introduced the Macintosh.

All these small computers became more powerful and could be linked together to form networks. These networks eventually led to the development of the Internet.

The fourth generation also saw the development of the GUI (graphical user interface), the mouse and many different handheld devices.

In 1990 Microsoft launched Windows 3.0, which had new features such as a streamlined GUI and improved protected-mode support for the Intel 386 processor. It sold over 100,000 copies in two weeks! By 1993, Windows had become the most widely used GUI operating system in the world.

Fifth Generation computers are those considered to have artificial intelligence, and this generation covers everything from the present and beyond. Most of these computers are still in development, though some applications, such as voice recognition, are being used today. Quantum computation, molecular technology and nanotechnology are expected to change the face of computers in the years to come. The goal of fifth generation computing is to develop devices that respond to natural language input and are capable of learning and self-organization.