The REAL Ultron: Robots Are Taking Over!

We always fear technology

Ever since we learned to build machines, we have wondered if they will rebel. Will they kill us in our sleep? Or, if they could be taught to really think like humans, would they enslave humanity… and then kill us? Thinking machines have only existed for about a hundred years, since we entered the age of electricity. Just a few years into the electrical age, a play was written that considered the possibility of the machines rebelling. This play, which premiered in Czechoslovakia in 1921, used a new word… Robot (from an old term, “Robota”, the mandatory and unpaid work performed by a serf for the lord of the manor). Early on in the play, the artificial people in the story started acting like real people and … yeah… killed all the humans.

The latest Avengers movie, “Age of Ultron”, will follow in the footsteps of the rest of the Marvel franchises and have record ticket sales. True, it’s another thrill-a-minute action adventure, but underneath all of the explosions and action shots there is a troubling message. We are increasingly worried that technology is getting out of control.

Just after World War II, the world was fearful that nuclear power would get out of control and might doom humanity. It hasn’t happened so far, but it still might. The new fear is that computer technology is moving from simple artificial intelligence, like search engines and Siri-type personal assistants, to more capable A.I. systems that will first mimic human behavior and then surpass human intelligence. Add this to the arrival of drones and robots, hardware that can interact with us… and kill us, and we have real reasons to be afraid of an Ultron future!

The idea of an all-powerful artificial intelligence is nothing new, certainly not in the movies. In the Terminator series, we see intelligent robots (Terminators), but the super powerful intelligence is a computer system called “Skynet”, which launches a nuclear war against humanity. Certainly we don’t want a future like that! Which is why it’s all the more ironic that the group that Edward Snowden stole information from, the National Security Agency (NSA), named one of its spy systems Skynet. Snowden tells us that he leaked information because the NSA was not taking its work seriously. But the NSA can refute this by showing systems like Skynet, which is named for a fictitious computer system that turns into a weapon of murder, whereas the NSA’s Skynet was created to sift through phone data to find high-value targets to imprison or assassinate. Hmmm… maybe spy agencies are better off without a sense of humor.

At the start of the latest Iraq war, we used remote-controlled devices. These looked like robots: relatively small machines (10 to 200 lbs) that moved around on the ground, and larger devices that flew in the air. Some of the ground devices had weapons, but they were completely remote-controlled… at all times they were being controlled by an operator in a remote location. Often, that location was just a few feet away, while a device looked for a bomb or tried to look into a room with hostages.

A flying device with a range of hundreds of miles needs some autonomy, at least enough intelligence to know if it should complete a mission or return home when the signal from the remote operator is lost. From that humble start, drones are now being built that could be dropped off in the desert to autonomously patrol (recharging on solar power), report back on terrorist activities (number in the area, communications traffic, etc.) and only call back when they need assistance (“I think I should kill this guy. Can I kill this guy?”).

Can robots understand ethics… and kill us?

The trailer for “Age of Ultron” plays a haunting version of “I’ve Got No Strings”, from “Pinocchio”. This song is a reminder that the true danger behind Ultron is his free will. He can do anything (hint: “Kill the humans”). Some of the most brilliant thinkers today… Stephen Hawking, Elon Musk and Bill Gates… are warning us that the unrestricted growth of artificial intelligence could doom humanity.

Another brilliant thinker and writer, Isaac Asimov, wrote 300 books, many exploring the subjects of Science, Science Fiction and Robots. Asimov is famous for creating the “Laws of Robotics”. Basically, robots must protect themselves, yet they must still obey any order they are given (orders trump self-protection). Above all else, robots must protect humans from harm (which trumps other orders or self-protection).
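For the programmers in the audience, that ranking can be sketched in a few lines of Python. This is only a toy illustration, not how any real robot is built: the `Action` class, the `choose` function and the three sample actions are all made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical action a robot is considering."""
    name: str
    harms_human: bool     # would a human come to harm?
    disobeys_order: bool  # would the robot ignore an order it was given?
    harms_robot: bool     # would the robot damage itself?


def choose(actions):
    """Pick the action that best respects the ranking of the laws.

    Tuples compare left to right and False sorts before True, so
    protecting humans is settled first, then obedience, then
    self-protection.
    """
    return min(
        actions,
        key=lambda a: (a.harms_human, a.disobeys_order, a.harms_robot),
    )


options = [
    Action("obey the order and get damaged", False, False, True),
    Action("refuse the order and stay safe", False, True, False),
    Action("obey an order that injures someone", True, False, False),
]

print(choose(options).name)
```

With these made-up actions, the robot obeys and accepts the damage, because orders trump self-protection and nobody gets hurt.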

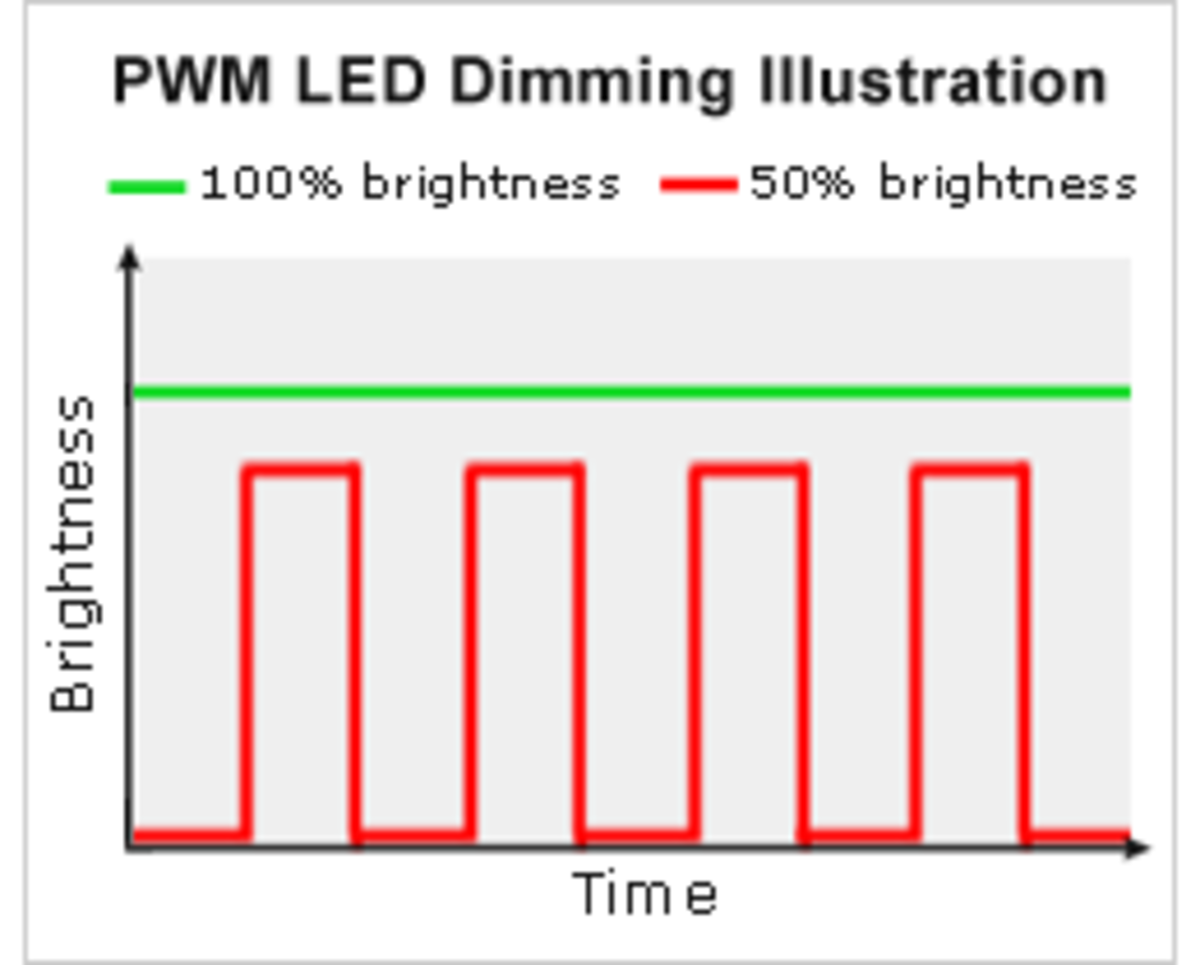

That’s a pretty good set of rules. But do they work in the real world? Robotic vacuum cleaners have been around for a while, but their intelligence focuses on finding dirt, not making life and death decisions. When cars that drive themselves become available for sale, life and death decisions will happen all the time. Once every car has an A.I., we can expect the 35,000 car-related deaths and 1,000,000 car collisions that happen every year to largely go away. Cars will communicate with each other and prevent stupid mistakes. But in the decades it takes for robotic cars to take over, robots will need to contend with human drivers who can be illogical, impatient, sleepy, drunk or distracted. During this period of transition, robots can reduce the number of accidents, but can they eliminate them?

Imagine that a nearby car has a driver and three passengers, and all three passengers are children. The driver has had too much to drink and makes an illegal lane change at very high speed, setting you up for a potentially fatal accident. Your robot driver instantly analyzes the situation and identifies the only three options.

- Option one is for your robot to make a sharp turn; this saves your life, but allows the drunk driver to hit yet another car, killing all four people in the drunk driver’s car plus two more people in the other vehicle. You live, but six other people die. If a robot’s primary responsibility is protecting the lives of humans, can it accept this option?

- Option two is to heroically “tap” the drunk driver’s car, pushing it into a safe spot in the traffic, which throws your car out of control, killing you. This option saves lives, but still kills you. Personally, I wouldn't buy a car if it might choose to kill me, even if it had a REALLY good reason. Are there any other “good reasons” to kill me?

- Option three is to do nothing, and let the drunk driver hit you. You die, the drunk driver dies and so do the kids. Five fatalities. If a robot cannot take any action to kill a human, does it mean that it isn't allowed to take any action, and has to just accept getting hit by the drunk driver?
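To see why this is so hard to program, here is a minimal sketch that tallies the three options under two different rules. The death counts come from the scenario above; the two rules (`fewest_deaths` and `protect_owner_first`) and the option labels are assumptions made up for this illustration, not anything a real carmaker has published.

```python
# Outcomes of the three options described above.
options = {
    "swerve": {"deaths": 6, "owner_dies": False},
    "tap the other car": {"deaths": 1, "owner_dies": True},
    "do nothing": {"deaths": 5, "owner_dies": True},
}


def fewest_deaths(opts):
    """Hypothetical rule 1: minimize the total number of deaths."""
    return min(opts, key=lambda name: opts[name]["deaths"])


def protect_owner_first(opts):
    """Hypothetical rule 2: keep the owner alive, then minimize deaths.

    False sorts before True, so options where the owner survives win.
    """
    return min(
        opts,
        key=lambda name: (opts[name]["owner_dies"], opts[name]["deaths"]),
    )


print(fewest_deaths(options))
print(protect_owner_first(options))
```

The two rules pick different options: the first sacrifices you to save five others, the second saves you at the cost of six lives. Both rules are easy to write down, and they flatly disagree.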

In the real world, there are sometimes no good options. But as humans, do we even agree which of these options is the “least bad”? If not, just how do we tell robots to act? The military knows about the ethics of killing. Soldiers are taught rules about when they can and cannot kill. Military courts examine questionable decisions, and further define what is and is not allowed.

Once we armed drones, we changed the rules about not harming a human. A remote operator is supposed to “pull the trigger” when someone is killed, but if the drone loses communication with the operator, it can autonomously identify a target and independently make a kill. One report tells us that drones authorized to kill 41 approved targets killed a total of 1,147 people. We don't know which kills were controlled by operators and which were initiated by drones. But... robots are killing people. Not because they have rebelled, but because it's what they were built to do.

On Land, they track you

In the Sky, they watch you

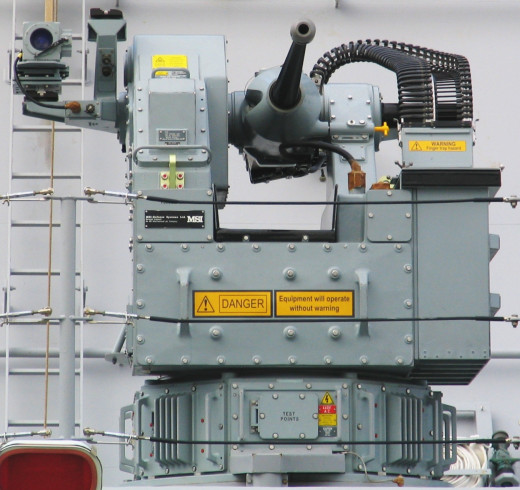

On the Sea, they'll shoot you

When will the robots take over?

So, we built killer robots. Next, we’re going to build a whole lot more of them! Not just flying drones; we will see walking, talking robots that look increasingly like people. This generation of robots will need to interact with people… civilians… and will need to behave like humans to fit in. The US Army has plans to dramatically reduce the number of soldiers (by at least 100,000), as well as support personnel… cooks, janitors, car drivers, mechanics. To accomplish this, they MUST add thousands of robots.

Humanoid robots will not just carry guns, they will man checkpoints, serve food to soldiers, check for bombs, retrieve soldiers from the battlefield to hospitals and provide interpretation services. The skills they develop on the battlefield will then translate into new jobs back home. Civilian robots may be a different model, but the software will just move over. Battlefield medics become paramedics back home. Oh… and most of them will look a lot like Ultron. Maybe a bit less evil.

But humans are still in charge, right? Yes, but not forever. The renowned astrophysicist, Neil deGrasse Tyson, raised a point about how intelligence works. He said that we should compare a chimpanzee to a human. Our DNA, which tells us how our bodies are built, only differs by 1%. Yet our intelligence, and the resulting capabilities, are very different. Humans can write symphonies, build spaceships and perform brain surgery. Chimps can use simple tools, such as sticks to get termites out of the ground, but they haven’t developed writing or language.

With the help of a more advanced species (humans), the most intelligent chimps can have their intelligence “pushed” to understand sign language and writing. No chimp has written a great novel, but their language capacity does reach that of a young child. If we ever meet aliens from another planet, they might be almost… but not exactly… the same as humans. But a slight difference in DNA might mean that alien infants understand concepts that only the most brilliant human adults will ever understand, and that alien adults understand ideas that a human can never grasp. Aliens with a tiny advantage in evolution might have intelligence beyond our comprehension.

Not every astronaut is a rocket scientist!

How quickly is Artificial Intelligence developing?

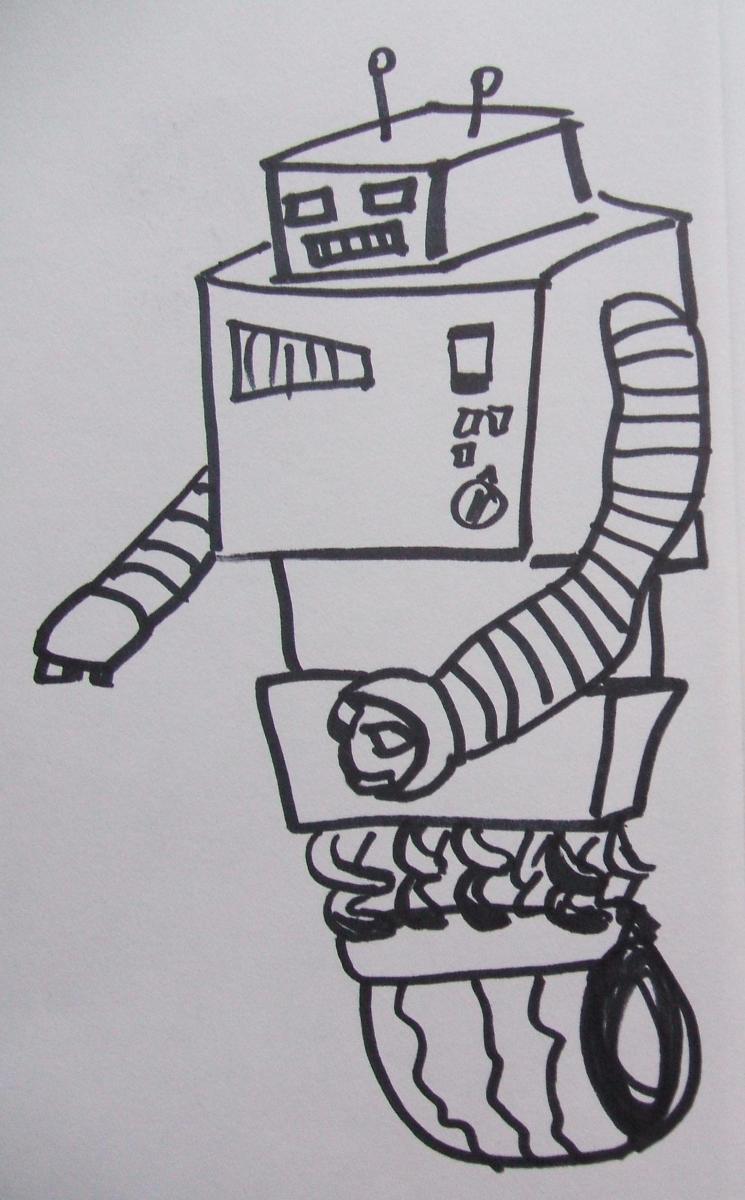

Human intelligence took millions of years to evolve. Computers and A.I. have been around for less than one hundred years. While human intelligence is still superior, it is expected that A.I.s will reach the level of human intelligence in 20-30 years. But A.I.s will not stop evolving. Every two years, the capabilities of an A.I. will double. This follows from an industry trend called “Moore’s Law”, first described in the 1960s: the number of transistors that fit on a chip doubles roughly every two years, so computing power keeps getting cheaper. Higher capacity and faster equipment is constantly replacing older, slower, more expensive equipment.

Consider the last computer you bought; the next computer will be more powerful and cost less. If you look at a longer period of time, Moore’s Law starts to pay staggering dividends. Doubling every two years, computers become about 32 times as powerful in just 10 years, and about 1,000 times as powerful in 20. That’s how we moved from desktop computers to laptops to smartphones that are not only more capable than everything that came before them, but cost less and fit in your pocket. Imagine this same rate of change for intelligence. If you and a robot co-worker had equivalent intelligence, in a few years the robot would have upgraded to super intelligence. Would humans ever get promoted to managers again?
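To see how that compounding adds up, here is a minimal sketch. It assumes a clean doubling every two years, which is an idealized version of Moore’s Law (real hardware does not follow it exactly), and the function name `growth_factor` is just something made up for this example.

```python
def growth_factor(years, doubling_period_years=2.0):
    """How many times more powerful after `years` of steady doubling.

    Assumes capability doubles once per `doubling_period_years`.
    """
    return 2 ** (years / doubling_period_years)


for years in (2, 10, 20, 30):
    print(f"{years:>2} years: about {growth_factor(years):,.0f}x")
```

The point is less the exact figures than the shape of the curve: each extra decade multiplies the previous total by another 32.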

On February 10, 1996, Garry Kasparov, the world's highest rated chess player, lost a game to IBM's Deep Blue computer system.

Are super intelligent robots inevitable?

Why haven't super intelligent robots already taken over? The reason is that people design hardware, but robots manufacture it. And they manufacture it with incredible efficiency, following Moore’s Law. However, software is designed and written by humans. We make far more mistakes and we don't improve very quickly. Also, as our software gets more complex, it takes more effort to fix programming mistakes. Humans need to think of a computer instruction in terms of human language, and then translate this into computer language. We make a lot of mistakes when we do this. When there are multiple programmers on a project, and there are sometimes thousands of programmers, each programmer writes code differently, making the code even more error prone.

Your smartphone is made up of many microchips, each having billions of circuits. Your phone could stop working if a single circuit failed, yet hundreds of millions of these phones are in operation. Software is made up of thousands to millions of lines of code; much of this code is faulty or non-functional. As software programs have grown larger, human-written programming code has become ever less efficient, with errors growing faster than new code can be written.

Recently, IBM addressed this problem. They had what they hoped was the world’s most intelligent A.I., Watson. The team was going to let Watson play on the game show Jeopardy against the game's top-earning winners. Programmers were making progress in getting Watson ready, but as the show date got closer, they spent more and more time fixing new bugs in the code they had written the day before. The answer was to show Watson how to write its own code, and then give Watson every question ever asked on Jeopardy, along with the correct answer. Watson would then write new code to understand what made the correct answer right. This process quickly built massive amounts of flawless code, and the A.I. easily beat all of the human players.

Watson, playing on Jeopardy

Will robots take over?

Ultron made a mistake in only seeing two options: the chaotic world of humans and an orderly and “just” world of robotic perfection. There is a third option. Instead of robots replacing humans, technology can upgrade us. We’re already pretty far down this road. The wars in Iraq have greatly increased research into prosthetic arms and legs. The newest generation of prosthetics not only incorporates A.I.; the latest models are making tentative steps to interface with the human brain and read our thoughts.

Of course, technology doesn’t need to be invasive. We simply carry smartphones to gain GPS, telecommunication and data capabilities. The keyboard used to be the interface; now voice control (and personal assistants like Siri) is becoming more efficient. Google Glass moved the screen from the smartphone to your face, allowing it to augment everything you see, adding heads-up displays that tell you about anything you glance at (want to know more about the Brooklyn Bridge? Blink here!).

Consider the huge number of elderly and disabled adults who use wheelchairs and scooters. Many would be happy to wear a scaled-down Iron Man suit to augment muscles and fix declining hearing and sight, perhaps even improving memory and cognition. A.I. will surround us, and become a standard feature in all technology. People and tech will merge together. Perhaps our memories will even blend together and we will be able to experience events that our limited human senses are incapable of experiencing independently.

The ongoing fusion of humanity and technology will benefit mankind, but for a long time, possibly centuries, there will be friction between those who are for this change and those who are against it. Today, we can see the early days of this division. In the Middle East, we can see factions that want to turn back the clock a thousand years. They’re willing to kill so that everyone lives a “purer” life. Their view may or may not survive into the next century, but there will always be resistance to fundamental change. America, which is very comfortable with the benefits of 20th century technology (including inoculations against disease, open heart surgery, a clean and safe food supply, flying on a plane, and so on), is having problems keeping up with the rate of change.

Most of us will regularly upgrade ourselves with the latest technology, or at least the latest that we can afford. But there will be people who choose to stay in the natural world, with all of its limitations, including a short life. When artificial intelligence is ready to use, and robots become a regular part of our lives, our world will be even more unnatural. But it will also be a life that is better, richer and longer than our lives today. At least, that’s my Niccolls worth for today!