Why use an XML sitemap on your website?


Every website owner wants search engines that find their website to also find every one of its pages and add those pages to their index. It’s crucial for a website owner that the search engine picks up the keywords and page description they have placed in their page content.

It is the presence of these keywords in a search engine’s index that helps get the web page displayed on the search engine’s results page. This happens whenever someone types the same keyword(s) into the search engine’s search box.

It’s every website owner’s responsibility to make it as simple as possible for search engine spiders to locate and index every webpage on the website whenever they visit.

A tried and tested way of doing this is to include a site map within the website. Normally the site map is accessed via a menu item, so both site visitors and search engine spiders can locate it easily. So let’s take a look at what a site map is, how many types of site map there are, and how a site map is created and attached to the website.

What is a site map?

A sitemap is a listing, often presented visually, of all the different pages contained within a website.

How many types of site maps are there?

There are two types of sitemaps: HTML (HyperText Markup Language) and XML (eXtensible Markup Language).

The HTML based site map

An HTML sitemap is a page that displays hierarchically grouped lists of hyperlinks to all the pages of a website. It's primarily designed for humans.

By adding an HTML sitemap to your website, you let site visitors navigate the website easily. A sitemap of this sort also helps with sitemap-based SEO because it allows search engine spiders to easily find the hyperlinks to every page on the website, thus avoiding missed pages.

Missed pages are pages that are not in the search engine’s index. These pages therefore never show up for people who use search engines (like Google, Bing and Yahoo) to find pages to visit.

The XML based site map

An XML sitemap is basically a list of the different URLs of a website, written in a very specific syntax (i.e. XML) that search engine spiders are built to read with ease.
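To make that syntax concrete, here is a minimal sketch in Python that builds a small sitemap using only the standard library. The `example.com` URLs and the `build_sitemap` helper are made up for illustration; the `urlset`, `url` and `loc` element names and the namespace come from the sitemaps.org protocol. Each page gets one `url` element with a `loc` child, and all of them sit inside a single `urlset` root.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page_url in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page_url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages of an example site.
    pages = [
        "https://www.example.com/",
        "https://www.example.com/about.html",
    ]
    print(build_sitemap(pages))
```

The protocol also allows optional child elements such as `lastmod` next to `loc`; they are left out here to keep the sketch short.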

Using an XML site map for sitemap-based SEO can speed up search engine indexing because an XML site map tells search engine spiders about the different URLs of the website in a format they understand.

Most search engine spiders are built to check whether an XML-based site map exists on a website. If it does, it’s accessed and used by the spider.
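One common way a spider learns where the site map lives is a `Sitemap:` line in the site's robots.txt file, which the sitemaps.org protocol defines. The sketch below uses Python's standard `urllib.robotparser` and a made-up robots.txt for `example.com` to show how a crawler might read that line. It assumes Python 3.8 or later, where `site_maps()` is available; the `find_sitemaps` helper is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Sitemap: https://www.example.com/sitemap.xml
"""


def find_sitemaps(robots_txt):
    """Return the sitemap URLs declared in a robots.txt document."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


if __name__ == "__main__":
    print(find_sitemaps(ROBOTS_TXT))
```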

A search engine’s spider does not have to visit each page of the website and navigate through the links on the page to understand the website’s architecture. The spider only has to locate the XML-based site map to see and understand the entire website architecture.

Hence a website that uses an XML-based site map for sitemap-based SEO is likely to be indexed faster and more completely, which gives its pages a better chance of appearing in search results.

The Importance Of Registering Your Sitemap with Google

Google actually assists webmasters in multiple ways. In the past, - Sitemap based SEO - was more of a guessing game. Then Google launched a program called Google Analytics. This program provides valuable data about your site. It reports a ton of useful information including:

- The last time the Googlebot paid a visit to your website

- The keywords ( keyphrases ) people use in searches to locate your site

- Problems in your website that need fixing

And a whole lot more.

If you have not created a Google analytics account for your website you really are missing on a whole lot of very important information about your website offered freely by the biggest search engine in use on the Internet.

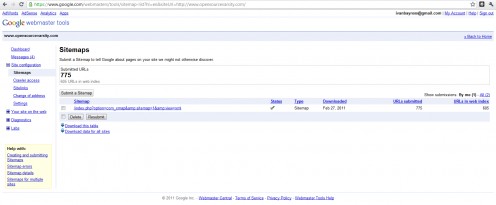

One of the of Google Analytics data entry forms permits a website owner ( webmaster ) to enter the URL that points to their website XML site map. This means that all webmasters have the power to inform Google exactly where their XML site map is to be found. This goes a really long way in ensuring that your website is found and indexed by Google’s spiders.

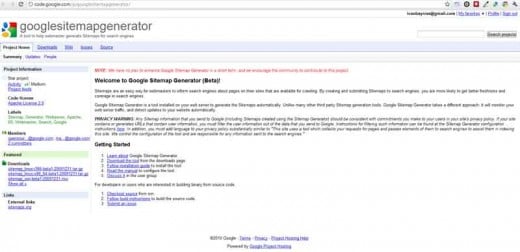

What’s really nice about using an XML-based site map on your website is that search engine spiders other than Google’s also access and use the same XML site map. There are a lot of tools available on the Internet that will create an XML-based site map for a website for free. Do a search in Google and you will be pleasantly surprised at how many there are.

All of these tools essentially do the same thing. Once you’ve given them a website URL they thoroughly scan the website and deliver a properly formatted XML file, normally named sitemap.xml, as the output. Save this file to your local computer. Once done, use FTP to place sitemap.xml in the root directory of your website, which is very often /public_html.
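As a rough illustration of what such a tool does on each page it scans, the sketch below pulls the links out of one HTML page and keeps only those on the same host. It is not how any particular generator works; the `LinkCollector` class, the `same_site_links` helper and the `example.com` addresses are invented for this example. A real tool would also fetch each discovered page and repeat the process until no new URLs turn up.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def same_site_links(page_url, html):
    """Return the sorted, de-duplicated same-host URLs linked from one page."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    found = set()
    for href in collector.hrefs:
        # Resolve relative links and drop any "#fragment" part.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        parts = urlparse(absolute)
        if parts.scheme in ("http", "https") and parts.netloc == host:
            found.add(absolute)
    return sorted(found)


if __name__ == "__main__":
    # Hypothetical page content from an example site.
    sample = '<a href="/about.html">About</a> <a href="https://other.example.org/">Elsewhere</a>'
    print(same_site_links("https://www.example.com/index.html", sample))
```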

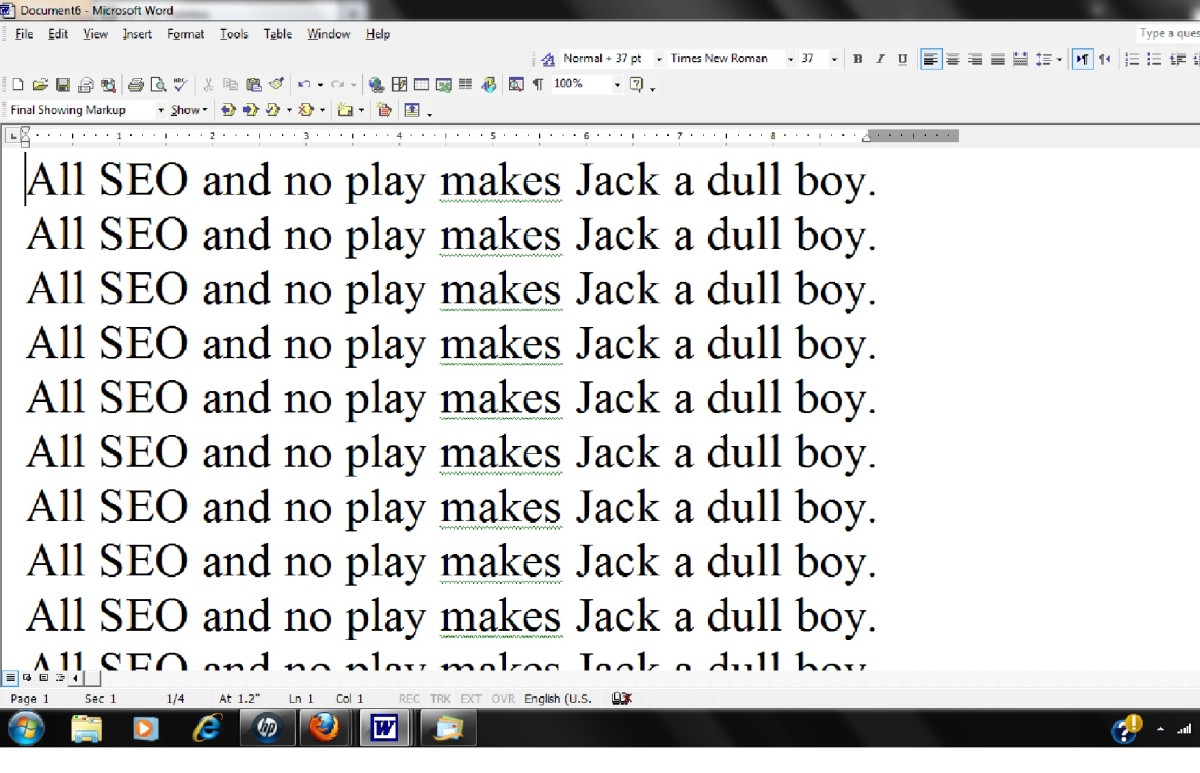

If you are constantly making changes to your website, i.e. adding pages or deleting old pages, then after every such change you need to go back to the XML-based sitemap creation tool, re-create the file sitemap.xml and overwrite the old sitemap.xml file in your website’s root directory. This is because the website architecture has changed and hence the contents of the current sitemap.xml are obsolete.

Using an obsolete sitemap.xml file can hurt the website’s standing in search engines. This is because one or more of the URLs contained within sitemap.xml could point to a nonexistent resource on the website. Hence, it’s important to update sitemap.xml immediately after making any structural change to the website.
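One way to catch an out-of-date file is to compare the URLs in the existing sitemap.xml against the pages the site currently has. The sketch below assumes you already have that list of live URLs from somewhere (your CMS, a crawl, a directory listing); the `sitemap_urls`, `stale_entries` and `missing_entries` helpers and the sample data are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by sitemap files that follow the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of URLs found in the <loc> elements of a sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def stale_entries(sitemap_xml, live_urls):
    """Sitemap URLs that no longer exist on the site."""
    return sorted(sitemap_urls(sitemap_xml) - set(live_urls))


def missing_entries(sitemap_xml, live_urls):
    """Live pages that the sitemap does not list yet."""
    return sorted(set(live_urls) - sitemap_urls(sitemap_xml))


if __name__ == "__main__":
    # Hypothetical old sitemap and current page list for an example site.
    old_sitemap = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/old-page.html</loc></url>
</urlset>"""
    live = ["https://www.example.com/", "https://www.example.com/new-page.html"]
    print("stale:", stale_entries(old_sitemap, live))
    print("missing:", missing_entries(old_sitemap, live))
```

If either list is non-empty, the sitemap no longer matches the site and should be regenerated.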

According to Google, an XML site map is especially helpful in the following situations:

- Your website contains dynamic content

- Your website is new and has few links pointing to it. Since spiders follow inbound and outbound links during the crawling process, your site may not be scanned if hardly any links lead to it

- Your website has pages that Googlebot cannot easily discover, e.g. pages with rich AJAX or images

- Your site contains a huge archive of webpages that are not well interlinked, or not linked at all

Do take a look at diagram 1 and diagram 2 below.

Ivan Bayross

Open source tutorials | open source training