SEO: Cloaking Can Be Ethical

Short Recap

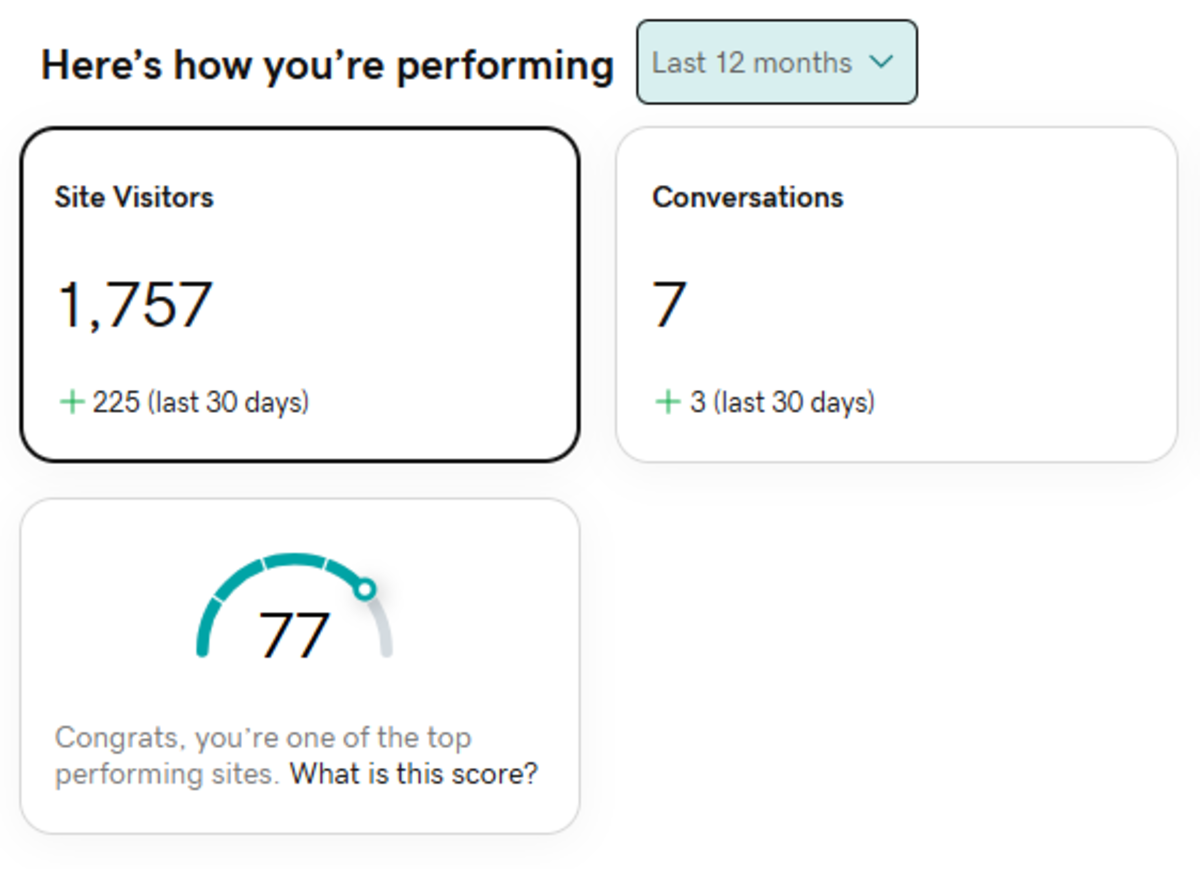

Search Engine Optimization (SEO) is a practice that keeps evolving along with the internet. "Cloaking" is one SEO practice that has long been debated and still is. Perhaps it needs another name, since the current term leaves a negative impression. At its simplest, cloaking means displaying the same data with a different presentation, and that alone should not be considered a bad habit. The practice is meant to optimize your information for whatever device or program reads the content. Examples include standard browsers, text-only browsers, WAP browsers, other deprecated browsers, hand-held devices, generic APIs, custom data-listening protocols, screen readers, scrape bots, data crawlers, and the spiders of the search engines themselves. SEO is also becoming a broader technology and topic to deal with. It may be combined with, or kept entirely separate from, Social Media Optimization (SMO), which emerged once social platforms became relevant to the internet because of their large number of users.

Optimized Content Versus Different Content

Cloaking is mostly used on web pages that expect to be read by a large number of different devices. If you optimize your web page accordingly, for example by removing layout tags or some images to suit a specific device's user-agent, but you still deliver the same content, it should not be referred to as cloaking. The term carries a negative connotation, and cloaking is treated as a black hat method, because it is also used to present totally different content in place of the real content. Displaying optimized content is different from displaying different content.

As of this writing, I am aware of the practice of hiding content from users but not from spiders or crawlers. As an example, a module for a popular forum software was created to do exactly that: a bot, whether a spider or a crawler, is treated as a registered member of the forums and is automatically logged in once the module detects that it is reading one of the forum's pages. This way the bot, even though unregistered, can enter, view and scrape the forums, articles, posts, profiles and comments that a non-registered user cannot access. Even though the content is hidden from non-registered users, it should not be referred to as "cloaking", because the page is simply optimized and the same content is available to registered, logged-in members. Again, to push my point, the content is not hidden. You just need to register and log in to view the content reserved for registered users.
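To make the distinction concrete, here is a minimal Python sketch of optimizing presentation while keeping the content the same. Everything in it is an assumption for illustration: the `ARTICLE` data, the user-agent patterns, and the function names `choose_presentation` and `render` are hypothetical, and real user-agent detection is far less tidy than two regular expressions.

```python
import re
from html import escape

# Hypothetical article data; the same text is served to every client.
ARTICLE = {
    "title": "SEO: Cloaking Can Be Ethical",
    "body": "Cloaking is simply displaying data with a different presentation.",
}

# Illustrative patterns only; real user-agent strings vary widely.
TEXT_ONLY_AGENTS = re.compile(r"lynx|w3m", re.IGNORECASE)
BOT_AGENTS = re.compile(r"bot|crawler|spider", re.IGNORECASE)


def choose_presentation(user_agent: str) -> str:
    """Pick a presentation style (not different content) for a client."""
    if TEXT_ONLY_AGENTS.search(user_agent):
        return "text"
    if BOT_AGENTS.search(user_agent):
        return "minimal_html"
    return "full_html"


def render(article: dict, user_agent: str) -> str:
    """Render the same title and body in the chosen presentation."""
    kind = choose_presentation(user_agent)
    title, body = article["title"], article["body"]
    if kind == "text":
        # Text-only browsers get no markup at all.
        return f"{title}\n\n{body}\n"
    if kind == "minimal_html":
        # Bots get the content without layout tags or images.
        return f"<h1>{escape(title)}</h1><p>{escape(body)}</p>"
    # Standard browsers get the full layout around the same content.
    return (
        '<div class="layout"><header><img src="logo.png" alt=""></header>'
        f"<h1>{escape(title)}</h1><p>{escape(body)}</p></div>"
    )
```

Only the wrapper changes between the three branches. The moment one branch returns a different title or body, the page has moved from optimized content to different content.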

Conclusion

My conclusion is that you can ethically present an optimized version of a page to each user-agent and device that you care about. As long as you actually provide the same content, the difference in presentation should not matter. This holds only as long as you do not present different content on another device, since that content might contain different data or a totally different point of view and could confuse some readers. Call it optimization or cloaking: as long as you do not confuse your readers, you are doing a great job.
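One rough way to check the "same content" rule is to strip the markup from two variants of a page and compare what text remains. The sketch below assumes that identical visible text is a good enough stand-in for identical content, which is a simplification. The names `visible_text` and `same_content` are hypothetical helpers, not part of any SEO tool.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects the text nodes of a document and ignores the tags."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        # Note: this also collects text inside <script> and <style>;
        # a stricter check would skip those elements.
        self.chunks.append(data)


def visible_text(markup: str) -> str:
    """Return the text of a page variant with whitespace normalized."""
    parser = _TextExtractor()
    parser.feed(markup)
    parser.close()
    return " ".join(" ".join(parser.chunks).split())


def same_content(variant_a: str, variant_b: str) -> bool:
    """True when two variants differ only in markup and whitespace."""
    return visible_text(variant_a) == visible_text(variant_b)
```

For example, `same_content('<div><h1>Title</h1><p>Same body text.</p></div>', 'Title\n\nSame body text.')` is true, while swapping the paragraph for unrelated text makes it false.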

© 2017 Jonathan Discipulo