How Winning Is Done: Data Integration to Surmount Cloud Adoption Challenges

Cloud is arguably the most important aspect of any digital transformation initiative, and any large change management project is incomplete without it. That's why organizations are spending a large part of their investment on adopting new cloud-based technologies. Despite investing a lot of blood, sweat, and toil, they struggle to realize the benefits of cloud adoption. There are challenges to surmount, and most of them stem from differing technology architectures. Realizing the benefits requires more than a Jurassic-era Extract, Transform, and Load (ETL) or Enterprise Application Integration (EAI) data integration framework. Organizations need to evaluate all the challenges and prepare a data integration strategy to surmount them.

Experts claim that most organizations underestimate the integration issues that create friction between cloud and on-premise applications. After moving systems to the cloud or adopting cloud-based platforms, midsize to large enterprises realize that they still have 6,000 to 7,000 or more legacy systems on-premise. Moving data out of these stove-piped applications consumes huge resources. Organizations should embrace a robust data integration model to bolster their cloud initiatives. A model that ensures continuous connectivity can help them adopt new technologies faster and gain many integration benefits downstream.

Conventional Approaches Losing Their Luster for Cloud Adoption

Historically, legacy systems were built with very little focus on connectivity and offered limited points for integration, which is why they are called stove-piped applications. They offer limited compatibility with cloud-based platforms and service-oriented architecture (SOA). Onboarding a trading partner required complex business logic dedicated to that partner's business setup.

Conventional Enterprise Service Bus (ESB): A conventional ESB framework was designed primarily to access and manage applications. The trade-off is that it requires three environments to be set up: development, test, and production. IT intervention is needed to build the framework at every layer. It is not an ideal fit for SaaS applications such as Salesforce and Workday.

Extract, Transform, and Load (ETL): The ETL data integration process is generally used for moving data out of a repository for analytical purposes. It uses a traditional rows-and-columns approach that has become outdated for SaaS-based applications that rely on pay-as-you-go models.

Cloud Adoption and Thorny Challenges

Stove-Piped Applications vs. Cloud Applications: There are fundamental architectural differences between legacy ERP systems and cloud applications and platforms such as Salesforce.com, Amazon EC2, Oracle, and SAP. Additional resources and licenses need to be purchased to support new integration needs. Otherwise, latency issues slow down data exchange and can halt the data onboarding process.

Architectural Differences Between Stove-piped and Cloud Applications

| Stove-piped Applications | Cloud Applications |
|---|---|
| Implement their own user IDs | Use a centralized process |
| Anti-pattern based | Pattern based |
| Lack code reusability | Service-oriented architecture (SOA) enables code reuse |
| Software lacks scalability | Scalable architecture |

Data Transformation Hurdles: There are data transformation hurdles to cross. New-age cloud platforms use data formats such as Extensible Markup Language (XML) for data storage, whereas legacy systems mostly use the comma-separated values (CSV) format. Converting files from XML to CSV, or vice versa, becomes a tricky operational challenge.
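As a rough illustration of the XML-to-CSV conversion described above, here is a minimal Python sketch using only the standard library. It assumes flat records, with one level of child elements under each record; the tag names `records` and `record` and the sample fields are hypothetical, not taken from any particular platform. Real payloads with nesting, attributes, or namespaces would need more handling.

```python
import csv
import io
import xml.etree.ElementTree as ET


def xml_to_csv(xml_text, record_tag="record"):
    """Flatten the direct children of each record element into CSV columns."""
    root = ET.fromstring(xml_text)
    rows = [
        {child.tag: (child.text or "").strip() for child in rec}
        for rec in root.iter(record_tag)
    ]
    # Collect the union of field names, preserving first-seen order.
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)  # fields missing from a record are written as empty
    return out.getvalue()


def csv_to_xml(csv_text, root_tag="records", record_tag="record"):
    """Turn each CSV row into a record element (assumes headers are valid XML tag names)."""
    root = ET.Element(root_tag)
    for row in csv.DictReader(io.StringIO(csv_text)):
        rec = ET.SubElement(root, record_tag)
        for key, value in row.items():
            ET.SubElement(rec, key).text = value
    return ET.tostring(root, encoding="unicode")


# Hypothetical sample payload with an optional field on the second record.
sample_xml = (
    "<records>"
    "<record><id>1</id><name>Acme</name></record>"
    "<record><id>2</id><name>Globex</name><city>Pune</city></record>"
    "</records>"
)
csv_text = xml_to_csv(sample_xml)
xml_again = csv_to_xml(csv_text)
```

Even this small case shows why the conversion is tricky: the second record carries a field the first one lacks, so the CSV side has to invent an empty cell, and the round trip back to XML no longer distinguishes "missing" from "empty".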

Data Security: Cybersecurity becomes a concern when confidential business data and transactions held in physical storage are moved to a public cloud network. There are also weak endpoints in smart monitors, machines, and mobile devices. Conventional approaches don't provide network monitoring, security audits, or protection against breaches.

Latency Issues: Latency suffers when legacy data travels through a dense API network. Moving data from one legacy system to another can be easy, but separate workflows need to be built for moving data between cloud and on-premise applications. The network bandwidth of conventional integration approaches doesn't support load management.

Best Practices to Succeed With Cloud Adoption

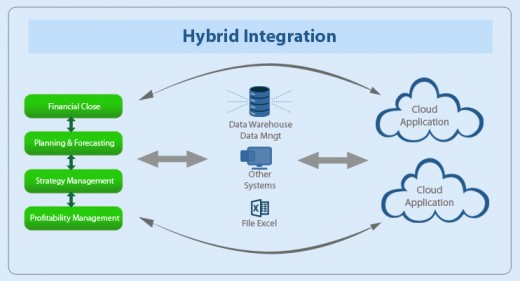

To drive digital transformation, application leaders need to drive data integration between hybrid applications. The following guidelines can help you set up a future-ready framework that supports new technology adoption.

Setting up a Hybrid Integration Architecture: Application leaders need to understand that every other digital initiative, such as Big Data, IoT, and mobility, will depend on cloud migration, which makes it an important change management initiative. The importance of this project should be explained through a business use case supported by a proof of concept. Application leaders should bring in other department leaders to understand line-of-business needs and build a robust hybrid integration strategy. The hybrid architecture must include self-service, 24x7 availability, dynamic scaling, monitoring, and disaster recovery options to support long-term business needs.

Embrace a test-and-implement strategy. Move small chunks of applications first to test performance, and then move the bigger ones. Make sure that your integration strategy allows you to:

- Integrate hundreds of thousands of on-premise systems with next-generation platforms like Salesforce, SuccessFactors, etc.

- Align on-premise, mobile, and partner applications and make them work in tandem.

- Enable business users to create workflows and allow IT to focus on governance.

- Reduce Total Cost of Ownership (TCO) and drive profitability.

- Protect sensitive data when it travels through ultra dense APIs.

Tricks of the trade: A hybrid integration strategy can help disparate cloud and on-premise applications work in tandem. There are several factors to consider when setting up a hybrid IT architecture. Read on to get your copy of the whitepaper, which covers six key factors to consider for setting up a robust hybrid IT architecture.

Customer Data Onboarding: Customer data onboarding is an important aspect of partner data exchange. Fast and smooth onboarding depends in turn on support for multiple storage and data format options. The framework should enable users to bring in structured and unstructured data from different sources, such as Amazon S3 and OpenStack Swift, and convert it into a single comprehensive format.
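To make the idea of converting partner data into one format more concrete, here is a small Python sketch of the normalization step. It assumes the payload has already been fetched from storage as text, and it only handles structured CSV and JSON input. The field aliases, function names, and sample payloads are all hypothetical; a real onboarding framework would also need validation, error reporting, and handling for unstructured data.

```python
import csv
import io
import json

# Hypothetical mapping from partner-specific field names to canonical ones.
FIELD_ALIASES = {
    "cust_id": "customer_id",
    "CustomerID": "customer_id",
    "mail": "email",
    "Email": "email",
}


def parse_payload(payload, fmt):
    """Parse a text payload into a list of dict records."""
    if fmt == "csv":
        return list(csv.DictReader(io.StringIO(payload)))
    if fmt == "json":
        data = json.loads(payload)
        # Accept either a single object or a list of objects.
        return data if isinstance(data, list) else [data]
    raise ValueError(f"unsupported format: {fmt}")


def normalize(records):
    """Rename known aliases and coerce every value to a trimmed string."""
    return [
        {FIELD_ALIASES.get(key, key): str(value).strip() for key, value in rec.items()}
        for rec in records
    ]


def onboard(payload, fmt):
    """Parse and normalize one partner payload."""
    return normalize(parse_payload(payload, fmt))


# Two hypothetical partners sending the same customer in different shapes.
csv_payload = "cust_id,mail\n42, a@example.com \n"
json_payload = '[{"CustomerID": 42, "Email": "a@example.com"}]'
from_csv = onboard(csv_payload, "csv")
from_json = onboard(json_payload, "json")
```

Both partners end up with the same canonical record, which is the property that lets downstream workflows stay unaware of each partner's original format.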

Data Security: GDPR and other data protection laws are looming, and organizations should focus on strengthening data security to avoid damages. End-to-end network monitoring can help organizations prevent security breaches. Organizations should consider new technologies for future-proofing the firewall against threat actors. Make use of centralized security platforms such as WebSEAL or Forefront plug-ins across the corporate Active Directory or LDAP. Also prepare a resiliency plan to respond faster to breaches.

Harness More Value Out of Data: Data is not just an asset; there are hidden opportunities inside it. Organizational data inside legacy systems that handle hundreds of thousands of transactions can be an even more critical corporate asset. It can be used to map customer journeys and build top-of-mind brand recall. Higher-level support will be needed to pipe data from legacy systems into Big Data tools and extract greater value from it.

Accommodating these guidelines in a cloud migration strategy can help industry leaders realize significant business benefits. Set up a research team to find more ways of making the cloud migration process frictionless.

The following video tutorial addresses these challenges by showing how organizations can integrate apps with enterprise systems. View the video to see how leading healthcare providers are using enterprise-focused integration solutions to align their messaging systems.